We live in a world with an ever-growing wealth of data, much of it available on the open web. Data also continues to accumulate in deep and dark places of the internet. Web data extraction allows companies in different industries to monitor relevant information on the open, deep, and dark web. They can use different types of web data — usually through web data integration platforms — to generate actionable insights automatically at scale.

We’ve created this guide to explain what web data extraction is, ways to extract web data, use cases for web data extraction, and the data feeds we offer.

What is web data extraction?

Web data extraction is the process of extracting, transforming, and unifying data from web pages into structured, machine-readable formats. It sometimes involves enriching the extracted data with attributes such as entities, sentiment, types, and categories. This structured data is used for specific business use cases or research purposes.

For example, a venture capital company could use web data extraction to gather data from websites with consumer reviews or online discussions. A team would analyze the data to discover shifts in public opinion about a specific company and predict future performance. VC leaders could then factor performance predictions into their decision on whether to invest.
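To make the extraction step concrete, here is a minimal sketch of turning a review page into a structured, machine-readable record using only Python's standard library. The HTML layout (an `h1` title and `p class="review"` elements), the `ReviewParser` class, and the sample page are assumptions made for illustration; real pages vary widely, and production systems add the enrichment steps described above.

```python
import json
from html.parser import HTMLParser


class ReviewParser(HTMLParser):
    """Collects text from <h1> and <p class="review"> elements (assumed layout)."""

    def __init__(self):
        super().__init__()
        self._field = None  # which field we are currently capturing, if any
        self.record = {"title": "", "reviews": []}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h1":
            self._field = "title"
        elif tag == "p" and attrs.get("class") == "review":
            self._field = "review"
            self.record["reviews"].append("")

    def handle_endtag(self, tag):
        if tag in ("h1", "p"):
            self._field = None

    def handle_data(self, data):
        if self._field == "title":
            self.record["title"] += data.strip()
        elif self._field == "review":
            self.record["reviews"][-1] += data.strip()


def extract(html: str) -> dict:
    """Parse raw HTML and return a structured record."""
    parser = ReviewParser()
    parser.feed(html)
    return parser.record


# Hypothetical sample page standing in for a real review site.
SAMPLE_HTML = """
<html><body>
  <h1>Acme Blender</h1>
  <p class="review">Great value for the price.</p>
  <p class="intro">Not a review, so it is skipped.</p>
  <p class="review">Stopped working after a week.</p>
</body></html>
"""

print(json.dumps(extract(SAMPLE_HTML), indent=2))
```

The resulting JSON record is the kind of structured output an analyst or downstream model can work with, rather than raw markup.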

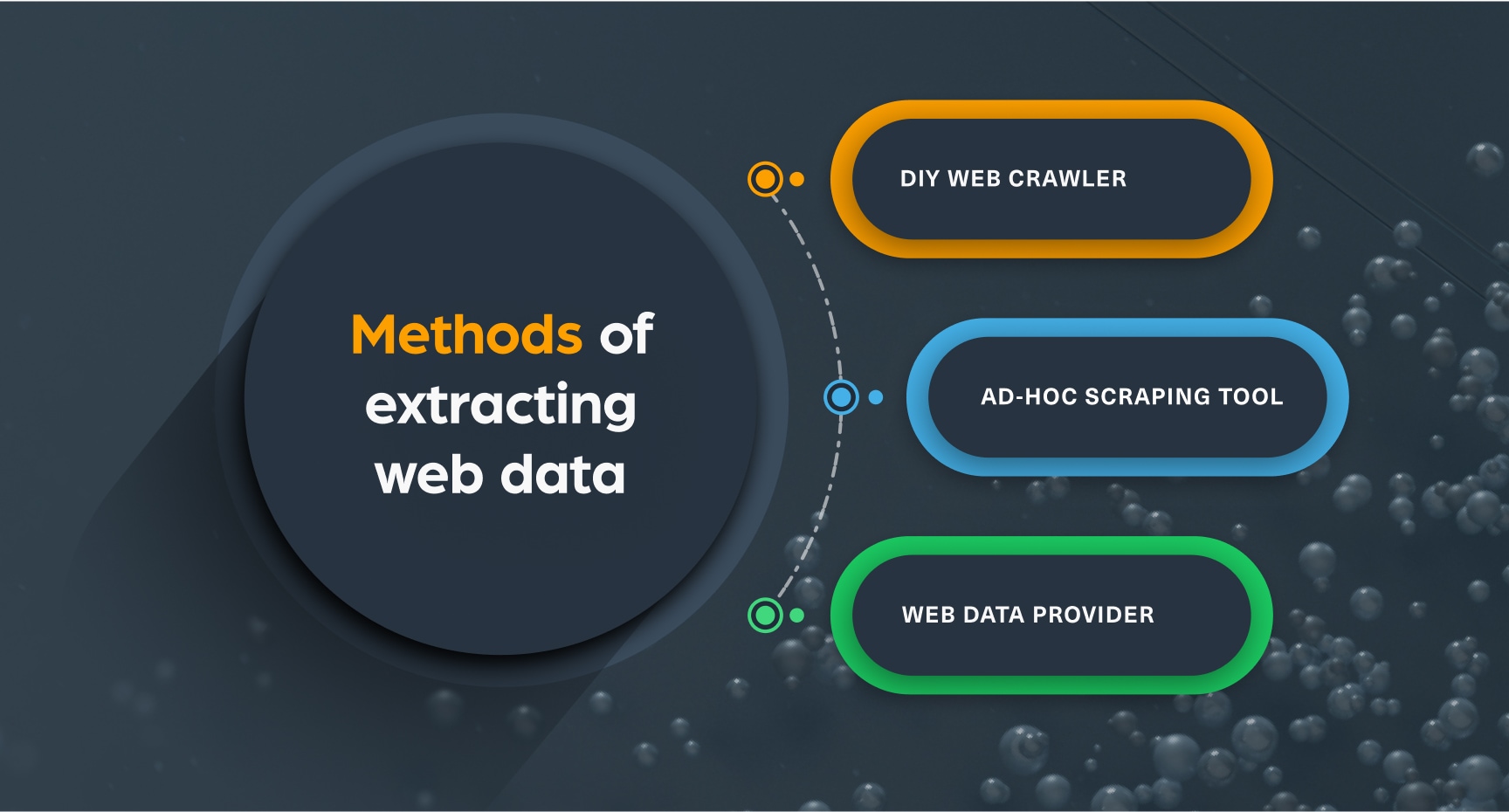

Methods of extracting web data

The three most common ways to extract data from web pages are:

DIY web crawler

The DIY approach means you build a web crawler in-house using your preferred language, e.g., Python, Ruby, or JavaScript. This approach gives you complete control – you choose how much data to scrape and how often to scrape it. A DIY web crawler requires technical skills, so companies usually turn to web developers to build it. We discuss the DIY approach to web data extraction in this white paper.
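As a rough illustration of the DIY approach, the sketch below shows the core loop of a small breadth-first crawler in Python. It is a simplified example, not a production design: the `crawl` function, its `fetch` parameter, and the in-memory `FAKE_SITE` are hypothetical stand-ins so the example runs without network access. A real crawler would fetch pages over HTTP and would also need to handle robots.txt, rate limiting, retries, and scheduling.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl that stays on the start URL's host.

    `fetch` is any callable that takes a URL and returns HTML or None;
    `max_pages` caps how much data is collected.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page missing or failed to load
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages


# Hypothetical in-memory site used in place of real HTTP requests.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/">Home</a> <a href="https://other.example/x">External</a>',
    "https://example.com/b": '<a href="/a">A</a>',
}

pages = crawl("https://example.com/", FAKE_SITE.get, max_pages=5)
print(sorted(pages))
```

Passing `fetch` as a parameter keeps the crawl logic separate from the network layer, so you can swap in a real HTTP client later and control how much you scrape through `max_pages`.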

Ad-hoc scraping tool

Many ad-hoc web scraping tools are available today, with prices and features varying widely. These tools automate parts of the extraction process. Some tools consist of basic automated scripts, while others use advanced technologies like machine learning. Some of them require developer involvement — e.g., to manage lists of websites to crawl and maintain the scraping tool. Ad-hoc scraping tools don’t scale well, and many include more features than you need, making them a less cost-effective option for most projects.

Web data provider

A web data provider, also known as a Data as a Service (DaaS) provider, allows you to extract web data without having to build any infrastructure or a web scraping system. You instead purchase the web data you need for your platform or application. DaaS solutions provide broader data coverage and far greater scalability than ad-hoc scraping solutions.

Most DaaS vendors provide data feeds through APIs, which makes it easy to integrate web data with platforms and applications. While DaaS solutions offer scalability for larger operations, you typically need to work with the DaaS provider to customize the data feeds.
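Integrating an API-based data feed usually comes down to building a query and parsing a JSON response. The sketch below illustrates that pattern in Python. Everything specific in it is hypothetical: the `api.example.com` endpoint, the `token`, `q`, and `size` parameters, and the response fields are placeholders, not any particular provider's API. Check your provider's documentation for the real names.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; replace with the one from your provider's docs.
BASE_URL = "https://api.example.com/v1/news"


def build_request_url(token, query, page_size=10):
    """Build a feed request URL from assumed query parameters."""
    params = {"token": token, "q": query, "size": page_size}
    return f"{BASE_URL}?{urlencode(params)}"


def parse_feed(raw_json):
    """Keep only the fields the application needs from each post."""
    payload = json.loads(raw_json)
    return [
        {
            "title": post["title"],
            "url": post["url"],
            "language": post.get("language", "unknown"),
        }
        for post in payload.get("posts", [])
    ]


# Hypothetical response body, used here instead of a live HTTP call.
SAMPLE_RESPONSE = json.dumps(
    {
        "posts": [
            {"title": "EV sales climb", "url": "https://news.example/ev", "language": "english"},
            {"title": "Battery costs fall", "url": "https://news.example/battery"},
        ]
    }
)

url = build_request_url("YOUR_TOKEN", "electric vehicles")
print(url)
for item in parse_feed(SAMPLE_RESPONSE):
    print(item["title"], item["language"])
```

In a real integration, you would send the request with an HTTP client and pass the response body to `parse_feed`.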

To learn more about which extraction approach would work best for your business, download our Web Data Extraction Playbook.

Use cases for web data extraction

You can use web data extraction to obtain relevant data for a wide range of use cases, such as:

Brand monitoring

You can achieve many business goals through web data extraction for brand monitoring, such as:

- Create effective influencer marketing campaigns — You can use web data from news and social media sites to see which influencers directly impact your brand and to find worthy influencers for your marketing campaigns.

- Maintain your company’s reputation — You can track mentions on social media and blogs containing product complaints or brand criticisms and then proactively address them based on the web data.

- Effectively manage a crisis — When a public relations crisis occurs, you can track online activity and consumer sentiment to see how the public reacts. You can also identify key reporters and influencers talking about it. You can take data-driven steps to mitigate the damage to your brand.

- Identify new consumer trends — Brand monitoring allows you to identify emerging trends and better understand the needs and wants of consumers. This knowledge can help you create better products and services for your customers.

- Benchmark performance against competitors — Use brand monitoring to benchmark your performance against your rivals. You can perform benchmark analysis using public competitor data, such as media mentions, search engine rankings, and website traffic.

Other things you can do with brand monitoring include gauging consumer sentiment, improving engagement with customers, and identifying user-generated content involving your brand.

Competitive intelligence

Traditional and alternative web data contain hidden signals and insights, giving companies a knowledge advantage that enables them to:

- Identify the strengths and weaknesses of competitors and create targeted business strategies in response.

- Discover current and future opportunities in the market that will help them increase their market share.

- Better understand global or regional events that could positively or negatively impact their industry position.

- Adopt a forward-thinking approach to strategic planning, ensuring the company stays well ahead of competitors.

- Improve product development and distribution, and better plan product launches.

- Create ESG data-driven investment strategies and accurate predictive financial models.

This is not a comprehensive list — you can do even more with access to a wide range of web data for competitive intelligence.

Market and product research

Outmaneuvering your competitors requires effective market and product research, which means conducting research not only with Google searches but also by using open web data. Extract relevant data from online review sites, blogs, and forums to discover how customers view your products. Learn how they feel about product changes, value for the price, and overall satisfaction. You can also use open web data to perform market research, such as monitoring pricing trends over time, keeping an eye on your competitors, and determining consumer demand for specific products.

Sentiment analysis

If you want your business to succeed, you need to know how your customers feel about your brand. Many customers express their thoughts about nearly everything online, including products and services. You could leverage that public data for sentiment analysis, using it to achieve various business goals. For example, a restaurant chain could analyze user-generated content that mentions elements of the customer dining experience, such as food quality, service, value for the price, locations, and overall ambiance. Armed with relevant open web data, the chain could provide an even better experience for diners, leading to more returning customers and higher revenue.
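The restaurant example can be sketched with a toy, lexicon-based scorer that tallies sentiment per aspect of the dining experience. This is only an illustration of the idea: the word lists, the `ASPECTS` mapping, and the sample reviews are invented for the example, and real sentiment analysis typically relies on trained language models rather than hand-picked keywords.

```python
import re
from collections import defaultdict

# Tiny illustrative lexicons; real systems use far richer models.
POSITIVE = {"great", "delicious", "friendly", "cozy", "fast"}
NEGATIVE = {"slow", "cold", "rude", "overpriced", "dirty"}

# Keywords that signal which aspect a sentence is about (assumed mapping).
ASPECTS = {
    "food": {"food", "meal", "dish", "pizza"},
    "service": {"service", "staff", "waiter"},
    "value": {"price", "value", "overpriced"},
}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def score_sentence(sentence):
    """Positive word count minus negative word count."""
    words = tokenize(sentence)
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def aspect_sentiment(reviews):
    """Sum sentence scores for every aspect each sentence mentions."""
    totals = defaultdict(int)
    for review in reviews:
        for sentence in re.split(r"[.!?]+", review):
            words = set(tokenize(sentence))
            for aspect, keywords in ASPECTS.items():
                if words & keywords:
                    totals[aspect] += score_sentence(sentence)
    return dict(totals)


REVIEWS = [
    "The food was delicious. The service was slow and the staff were rude.",
    "Great pizza! A bit overpriced for the portion.",
]

print(aspect_sentiment(REVIEWS))
```

With these sample reviews, food scores positive while service and value score negative, which is the kind of per-aspect signal the chain could act on.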

News aggregation

Breaking news and current events — local and global — can potentially impact your business positively or negatively. You can use web data extraction to monitor news content for specific keywords or create a personalized news feed based on your interests. For example, an investment firm could monitor news content for keywords related to a current global economic recession. The data would provide valuable insights that help investors determine opportunities or risks regarding investing in specific companies or markets.
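Keyword monitoring of news content can be sketched as a simple filter over incoming articles. The `Article` type, the sample headlines, and the keyword list below are assumptions chosen to mirror the investment-firm example; a production setup would read articles from a live feed and likely use more robust matching.

```python
import re
from dataclasses import dataclass


@dataclass
class Article:
    title: str
    body: str


def build_matcher(keywords):
    """Compile one case-insensitive pattern that matches whole keywords."""
    pattern = "|".join(re.escape(k) for k in keywords)
    return re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)


def filter_articles(articles, keywords):
    """Return (title, matched keywords) for each article with a hit."""
    matcher = build_matcher(keywords)
    hits = []
    for article in articles:
        text = f"{article.title} {article.body}"
        found = {m.group(0).lower() for m in matcher.finditer(text)}
        if found:
            hits.append((article.title, sorted(found)))
    return hits


# Hypothetical articles standing in for a real news feed.
ARTICLES = [
    Article("Central bank raises interest rates", "Analysts warn of a looming recession."),
    Article("Local team wins championship", "Fans celebrated downtown."),
    Article("Recession fears ease", "Inflation slowed for a third month."),
]
KEYWORDS = ["recession", "inflation", "interest rates"]

hits = filter_articles(ARTICLES, KEYWORDS)
for title, matched in hits:
    print(title, matched)
```

Only the two economy-related articles are returned, each with the keywords that triggered the match.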

Compliance risk monitoring

Organizations must effectively track new and changing regulations or they increase their risk of compliance failures. For example, organizations worldwide face potential Anti-Money Laundering (AML) and Know Your Customer/Business (KYC/B) compliance violations, which can lead to financial fines amounting to millions of dollars. By extracting data from relevant public government websites, companies can continuously track changes in laws like KYC/B and AML. They can better monitor compliance risk and ensure they comply with current regulatory requirements, avoiding hefty financial penalties. Organizations should also monitor public data about different companies, analyzing the data against existing law. For example, a financial services provider might discover a competitor currently faces AML fines or a sneaker brand might find a rival embroiled in a government-led legal case.

Digital risk protection

Every company operating online faces a wide range of digital threats, which include data breaches, phishing attacks, cloud-based service attacks, and ransomware. When bad actors succeed in breaching systems or applications, they typically sell or trade companies’ sensitive data via dark web hacker forums, chat apps, or paste sites. Many hackers will plan cybersecurity attacks far in advance, discussing their plans with others on the dark web. By monitoring dark web data, you can discover digital threats to your business early and identify new and emerging trends in cybercriminal circles.

Threat intelligence

Companies today face many threats from outside and within the business. For example, a stock trading business could find a malicious insider selling sensitive company information to third parties, or extremists on alternative social media sites making threats against executives and VIPs. Corporate travelers risk exposure to threats due to crime or terrorism at their destination. You could extract data from dark web sources to discover leaked company information. And using data from sites across the deep, dark, and open web, you could detect threats to high-risk executives and create travel and site security assessments for corporate travelers.

Specialty platforms and web data products need to incorporate varied web data, and lots of it, for their customers to succeed. And companies worldwide use Webz.io as their go-to source for structured data from the open, deep, and dark web.

Webz.io web data APIs

Webz.io collects data from open, dark, and deep web sources. We provide this data in the form of feeds, most of which we make available through REST APIs. Using our APIs, platforms and applications can generate relevant insights at scale. Here are brief overviews of our API products:

Open web APIs

- News API — Use this feed for news aggregation, brand monitoring, sentiment analysis, and competitive intelligence. Our feeds provide data from millions of daily news articles and enrich the data with smart entities like sentiment and type. You get access to news sources in 170+ languages going back to 2008. The News API uses an Adaptive Crawler, a proprietary technology we created to increase the number (it doubled!) of news articles we gather daily.

- Blogs API — This feed works great for sentiment analysis, market and product research, brand monitoring, and competitive intelligence. You get current data from blogs across the globe, and our platform enriches the data with smart entities, sentiment, and categories. The API provides live and historical blog data in 170+ languages.

- Forums API — This API provides relevant contextual information from forums across the open web that you can use for sentiment analysis, market and product research, brand monitoring, and competitive intelligence. Every day, Webz.io crawls millions of forum posts from across the globe. You get access to newly released posts and posts going back to 2008 in 170+ languages.

- Reviews API — Use this API for market and product research, product management, and brand monitoring. You get access to live and historical reviews data in multiple languages from review sites around the world.

- Gov Data API — This feed works incredibly well for compliance risk monitoring, investment intelligence, and competitive intelligence. Other ideal use cases include business due diligence, watchlist screening, and identifying third-party and supply chain risks. The Gov Data API gives you access to global governmental data going back at least five years. The data available includes governmental regulations, enforcement, ESG data, sanction lists, and corporate filings.

- Archived Web Data API — This API is ideal for AI modeling, where you need a massive volume of web data to train AI or NLP models. The API provides access to historical data from news, blogs, online forums, and reviews across the open web going back to 2008.

Dark and deep web APIs

- Dark Web API — The Dark Web API is ideal for brand protection, digital risk protection, threat intelligence, and fraud detection. The feed extracts password-protected and encrypted content from deep and dark web sources. It also tracks extremist social media posts and hacking posts.

- Data Breach Detection API — Use this API for digital risk protection, brand protection, fraud protection, and VIP protection. This feed helps you discover leaked data available on sites across the deep and dark web. The feed crawls sites indexed by attributes such as credit card, domain, BIN, SSN, and email.

Get the most from web data

Web data can help you and your customers address a wide range of concerns — from thriving in a highly competitive market and increasing brand loyalty to protection from cybersecurity threats and staying on top of compliance risks. With Webz.io, you can provide the scalable big data or specialty technology solution your customers need. And getting started with web data extraction is easy — pick a Webz.io API (or multiple APIs) for your use case, plug it into your platform or solution, and go!

Want to know how Webz.io can help you make the most of web data extraction? Contact us to speak with one of our web data experts (or DaaS experts).