Optimize LLM Data Preprocessing with Structured Historical Web Data

Want to optimize and scale data preprocessing for your large language model (LLM)? Read our blog post to find out how. Hint: structured historical web data.

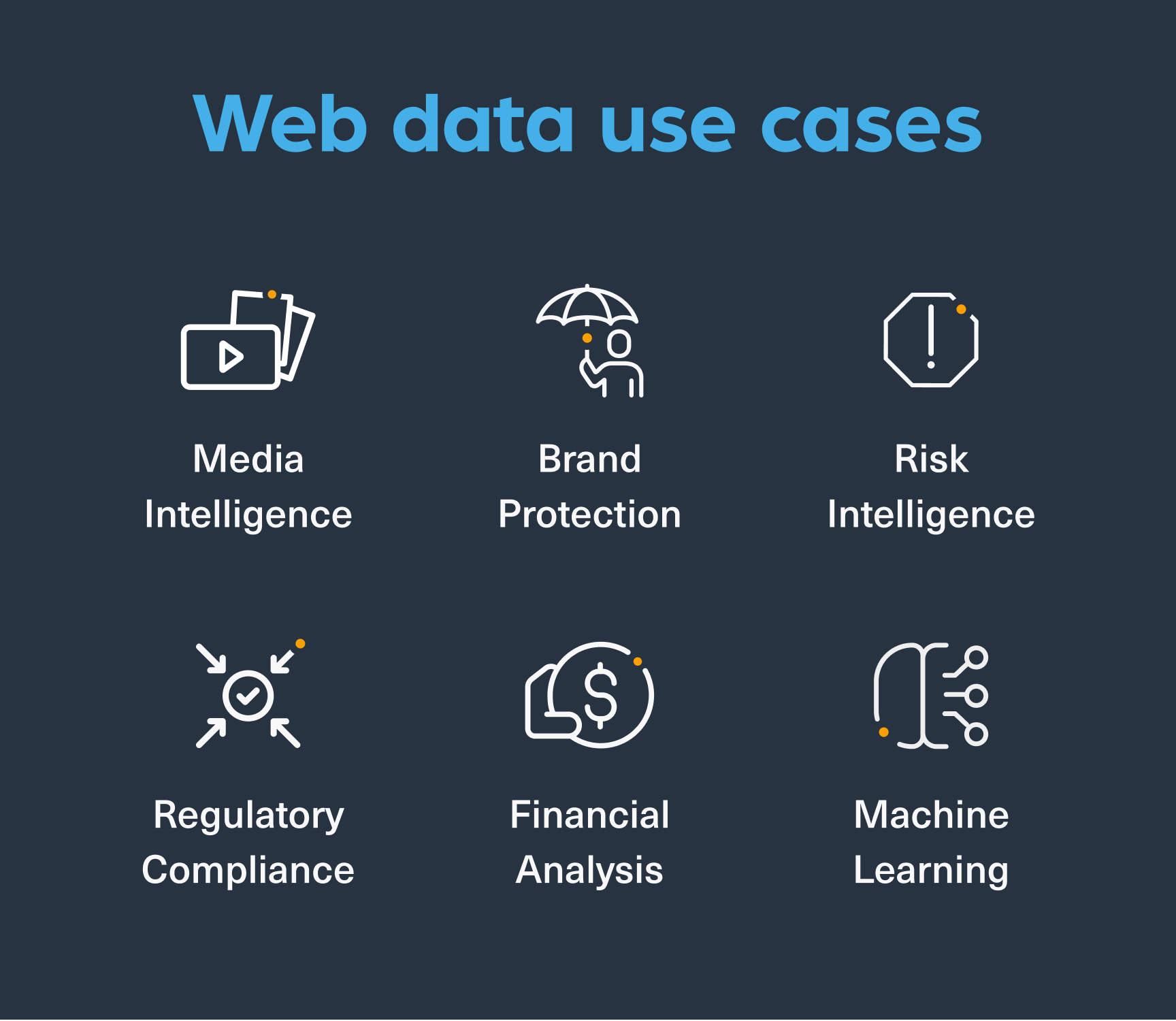

Every team in your business needs fast and accurate insights to make decisions that will help the company succeed. For this reason, companies across industries — from media intelligence to risk management — have turned to web data integration platforms for automated insights. These platforms require a lot of data, especially web data, to perform well. We’ve created this guide to explain what web data is, the use cases for it, and the web data options available today.

Web data is information sourced and structured from various sites across the Internet. Types of web data include:

We often see confusion around the terms “dark web data” and “deep web data,” so we’ve created an article to explain the differences between these two terms. You can use one or multiple types of web data to gain valuable insights for your business.

You can use web data for a wide range of use cases, such as:

Whatever the use case, you first need to find an efficient and cost-effective way to obtain relevant web data.

If you want to leverage web data, the first big challenge is figuring out how to get it. Should you build your own web crawling system, or is it better to buy a ready-made solution? Many companies also struggle with data literacy – having the right skills to make sense of web data. According to Gartner, most chief data officers will fall short in improving data literacy across their teams through 2025, making it even harder to turn web data into actionable insights.


Building your own web crawling solution gives you complete control over how you collect and process data, but it’s a major investment. Developing and maintaining an in-house system takes time, money, and a highly skilled team. As your data needs grow, scaling becomes increasingly complex and expensive. Plus, data privacy regulations change constantly, so staying compliant means ongoing updates and monitoring. If you go this route, be prepared for a long-term commitment with ongoing costs and challenges.

Buying a third-party web data solution is the faster, more scalable option. With a ready-made platform, you don’t have to worry about development, maintenance, or infrastructure costs. Leading providers offer tested solutions that can handle massive data volumes while ensuring compliance with industry regulations. The downside? You’ll have less control over how data is collected and may become dependent on vendor-specific technology.

Beyond choosing between building or buying, you’ll also need to address issues like data privacy, security, and integration. With increasing regulations and growing cyber threats, protecting collected data is more critical than ever. You’ll also need to integrate web data with your existing systems while ensuring accuracy and consistency. And, of course, data quality matters – if your data is full of errors or gaps, your insights won’t be reliable.
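To make the data quality point concrete, here is a minimal Python sketch of a check you might run on a batch of collected records before it reaches your analytics. The field names in `REQUIRED_FIELDS` and the `quality_report` helper are hypothetical and used only for illustration; adapt them to whatever schema your own data uses.

```python
from collections import Counter

# Hypothetical schema: the fields this sketch treats as mandatory.
REQUIRED_FIELDS = ("url", "title", "text", "published")


def quality_report(records):
    """Count empty or missing required fields and repeated URLs in a batch."""
    missing = Counter()
    seen_urls = set()
    duplicate_urls = 0

    for record in records:
        # A field counts as missing when it is absent or empty.
        for field in REQUIRED_FIELDS:
            if not record.get(field):
                missing[field] += 1

        # Every repeat of an already-seen URL counts as one duplicate.
        url = record.get("url")
        if url:
            if url in seen_urls:
                duplicate_urls += 1
            seen_urls.add(url)

    return {
        "total": len(records),
        "missing": dict(missing),
        "duplicate_urls": duplicate_urls,
    }
```

A report like this gives you a quick signal about gaps and duplicates, so you can decide whether a batch is reliable enough to use.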

To make web data work for you, you need a strategy that balances cost, control, and expertise. Whether you build or buy, the key is finding a solution that delivers the insights you need without unnecessary risk or complexity. We cover this topic in more detail in this white paper.

If you’re using machine learning or large language models (LLMs), structured web data isn’t just helpful – it’s essential. Unlike raw, unstructured data, structured web data is already categorized and formatted, saving you hours of cleaning and prepping before analysis.

For AI-driven applications, structured data eliminates inconsistencies, reduces bias, and improves accuracy. It allows you to extract high-quality insights at scale, making everything from risk assessments to financial forecasting more precise. Plus, structured data is easier to retrieve and index, which means you can integrate real-time information into your models and decision-making processes without delays.

Efficiency is another major advantage. Without structured data, you’ll need to standardize datasets before they’re even usable. By leveraging structured web data, you can train models faster, cut down on operational costs, and generate insights with more confidence.
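To show what that standardization work can look like, here is a rough Python sketch that maps raw records with inconsistent field names and date formats onto one structured shape. The `Article` schema, the alternative field names (`headline`, `body`, `link`, `date`), and the list of date formats are all assumptions made for this example, not a description of any particular provider's data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Article:
    """Hypothetical target schema for a structured record."""
    url: str
    title: str
    text: str
    published: Optional[datetime]


# Assumed date formats; real-world sources will need a longer list.
DATE_FORMATS = ("%Y-%m-%dT%H:%M:%S%z", "%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Try each known format and return a UTC datetime, or None on failure."""
    if not value:
        return None
    for fmt in DATE_FORMATS:
        try:
            parsed = datetime.strptime(value.strip(), fmt)
        except ValueError:
            continue
        # Treat dates without a timezone as UTC (an assumption of this sketch).
        if parsed.tzinfo is None:
            parsed = parsed.replace(tzinfo=timezone.utc)
        return parsed.astimezone(timezone.utc)
    return None


def normalize(raw):
    """Map a raw record with inconsistent keys onto the Article schema."""
    title = raw.get("title") or raw.get("headline") or ""
    text = raw.get("text") or raw.get("body") or ""
    return Article(
        url=(raw.get("url") or raw.get("link") or "").strip(),
        title=" ".join(title.split()),  # collapse runs of whitespace
        text=" ".join(text.split()),
        published=parse_date(raw.get("published") or raw.get("date")),
    )
```

With structured web data, most of this mapping has already been done for you, which is where the time and cost savings come from.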

Looking ahead, companies that prioritize structured web data will gain a clear edge. Whether you’re building AI models, analyzing market trends, or ensuring regulatory compliance, structured data helps you scale, stay accurate, and get results – without unnecessary complexity.

If you want to buy a web data solution, you have two types to choose from: ad-hoc web scraping or web data feeds via APIs. The solution that will work best for you depends on how much data and scalability you need. In general, ad-hoc web scraping solutions are designed for small-scale data projects: the provider delivers web data based on a list of websites the customer supplies. A large enterprise, by contrast, typically needs to leverage massive volumes of web data from many different sources.

On the other hand, a solution that uses high-speed web feeds with flows of different data types would work well for the enterprise. Web data feeds provided through APIs allow businesses to access ongoing scalable flows of web data from numerous websites. They enable companies to generate insights from web data at scale.
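As an illustration of how a platform might consume an ongoing feed, here is a minimal Python sketch of cursor-based pagination. It does not use any real provider's API: the `fetch_page` callable, the `"items"` and `"next"` keys, and the `fake_fetch` stand-in are all hypothetical, so check your provider's documentation for the actual request and response format.

```python
from typing import Callable, Iterator, Optional


def iter_feed(
    fetch_page: Callable[[Optional[str]], dict],
    max_pages: int = 100,
) -> Iterator[dict]:
    """Yield items from a paginated feed until no next-page cursor remains.

    fetch_page takes a cursor (None for the first page) and returns a dict
    with an "items" list and an optional "next" cursor. Both key names are
    assumptions made for this sketch.
    """
    cursor = None
    for _ in range(max_pages):  # hard cap so a faulty feed cannot loop forever
        page = fetch_page(cursor)
        yield from page.get("items", [])
        cursor = page.get("next")
        if not cursor:
            break


def fake_fetch(cursor):
    """In-memory stand-in for an HTTP call, used only to demonstrate iter_feed."""
    pages = {
        None: {"items": [{"id": 1}, {"id": 2}], "next": "p2"},
        "p2": {"items": [{"id": 3}], "next": None},
    }
    return pages[cursor]


if __name__ == "__main__":
    for item in iter_feed(fake_fetch):
        print(item)
```

In a real integration you would replace `fake_fetch` with a function that calls your provider's endpoint. Passing the fetch function in as an argument keeps the pagination logic easy to test without network access.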

The Webz.io platform gathers data from sources across the open, dark, and deep web. We provide this data in the form of feeds which you can integrate into other platforms using our data feed APIs. Our web data feeds allow platforms to generate relevant insights at scale. Here are brief overviews of our API products:

Today, web data plays a vital role in decision-making and risk protection for many businesses. But what does the future look like for web data as a whole? In the future, we expect to see the following:

How companies use web data will continue to evolve, and so will solutions for obtaining it.

Want to learn more about how to use web data effectively? Contact us today to talk with one of our data experts.

