How to Automate Supply Chain Risk Reports: A Guide for Developers

Do you use Python? If so, this guide will help you automate supply chain risk reports using ChatGPT and our News API.

ChatGPT has been all over the news lately, quickly becoming one of the most well-known large language models (LLMs). LLMs are deep learning models that can generate textual content (e.g., summaries, essays, and answers to questions) based on what they’ve learned from textual data. Other LLMs you’ve probably heard of include DeepMind’s Gopher, Google’s BERT and T5, and Microsoft’s Turing NLG. All these models require massive volumes of high-quality and diverse web data for pre-training. Pre-training means you first train the model on a general task so that it learns initial parameters it can then reuse to solve downstream tasks.

You have two main options for obtaining web data to pre-train your LLM: an open-source dataset or a commercial dataset. One of the most popular free sources of web data is Common Crawl (CC). Common Crawl has very few direct competitors, but other sources of public web data include the Wikipedia database, Hugging Face, and Kaggle.

This post compares Common Crawl and Webz.io data for use in large language models.

Common Crawl is an open-source repository of non-curated web crawl data going back to 2008. It contains petabytes of data obtained by crawling billions of web pages with trillions of links. The Common Crawl dataset is currently stored on Amazon S3 (AWS Open Data), and it’s a popular data source for training language models like GPT-3. The CC organization releases a new crawl every 1-2 months, and each crawl usually contains several billion web pages. Some companies use Common Crawl for language model pre-training, and others use it for AI research.

Webz.io is a platform that extracts and formats data from sources across the open, dark, and deep web. We supply this data in the form of structured feeds you can integrate into other platforms using our data feed APIs. The feeds come in JSON, XML, and CSV formats. Our platform provides predefined data verticals, such as news, blogs, forums, and reviews — and nearly all the data goes back to 2008. We also provide data from government websites worldwide.

While both Common Crawl and Webz.io provide massive datasets, the two have many differences:

| Common Crawl | Webz.io | |

|---|---|---|

| Archive time frame | 2008 – present | 2008 – present |

| License | Open source — limited license, but anyone can use the data. Limited commercial use. | Commercial — you can use the data for commercial purposes. |

| Cost | Free to use — anyone can access and use the data provided by Common Crawl without any cost. | Paid service — cost varies, flexible pricing options available. Cost depends on the amount and type of data you need. |

| How data is provided | In large raw files. | Through a RESTful API or Firehose — for easy integration with apps and systems. |

| Data quality | Crawls the entire web, including low-quality and spam websites. Data may not always be accurate or reliable. | Only useful data sites are crawled. We also format, clean, and enrich the data. You get high-quality data with NO noise. |

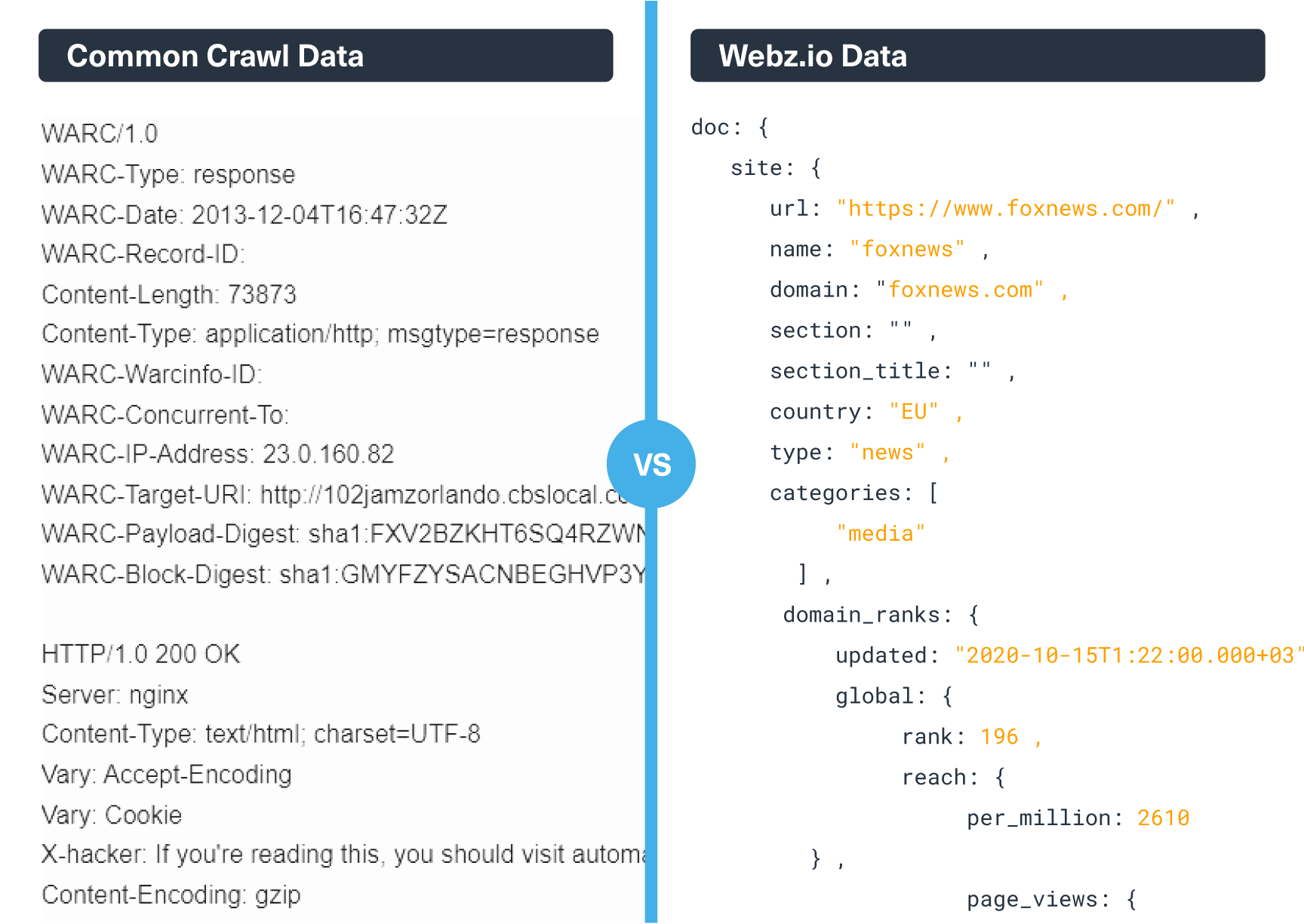

| Data format | Web ARChive (WARC). | Digestible JSON or XML formats. |

| Dataset size and diversity | Crawls billions of web pages from more than 5 billion unique URLs, resulting in a large and diverse dataset. | Provides data from millions of sources. |

| Data structure | Data not structured. The corpus contains raw HTML, raw web page data, extracted plaintext, and metadata extracts. | Advanced web scraping techniques are used to provide cleaned and structured data from HTML. Data does NOT include HTML. |

| Real-time data available | No — Crawls the web regularly. Dataset is updated every month or every few months. | Yes — Webz.io provides real-time data. Users can access the latest information as soon as it becomes available. |

| Content publication date available | No — Common Crawl does not tell you the age of the data or when the web pages were published. The data goes back to 2008, so you can end up with out-of-date information. | Yes — Webz.io provides structured data feeds with numerous fields including publication date. You need this field to put time relevance to the content. |

| Pre-defined verticals | Crawls unclassified HTML pages from all around the web. Data not limited to certain types of sites or provided in sets focusing on specific verticals. | Data available in pre-defined verticals, including news, blogs, forums, and reviews. Dark and deep web verticals are also available. |

| Filtering options | Does not offer any filtering options to help users find specific information. | Users can filter the data based on specific criteria, e.g., language, location, keywords, and sentiment. Helps improve the relevance and quality of the data. |

| Indexing | Provides some indexing but not at a level that makes the data easily searchable. | We index the data using Elasticsearch and manage the storage and indexing infrastructure in-house. |

| Deep and dark web data | Not available — Common Crawl currently focuses on the open web. | Available — Webz.io crawls the entire web — open, dark, and deep. |

| Skills needed to use the data | Requires technical skills or resources to process and analyze the data. | Anyone can use Webz.io data regardless of technical skill. You need developers or engineers to integrate the API. |

| Compute power | You need a lot of compute power to extract relevant data from the raw data files they provide, and it takes a lot of time and resources to make the data usable. | Less compute power needed since crawled data has already been restructured and organized into usable data feeds. |

| Support | Common Crawl has an open community where researchers and developers share their knowledge and experience to help others use the data. | Webz.io offers support to help users navigate their platform and find the information they need. |

| Scalability | Difficult and costly to scale — additional skills and resources needed to scale. | Integrated with APIs, and we manage the service. Webz.io scales automatically to meet your current business needs. |

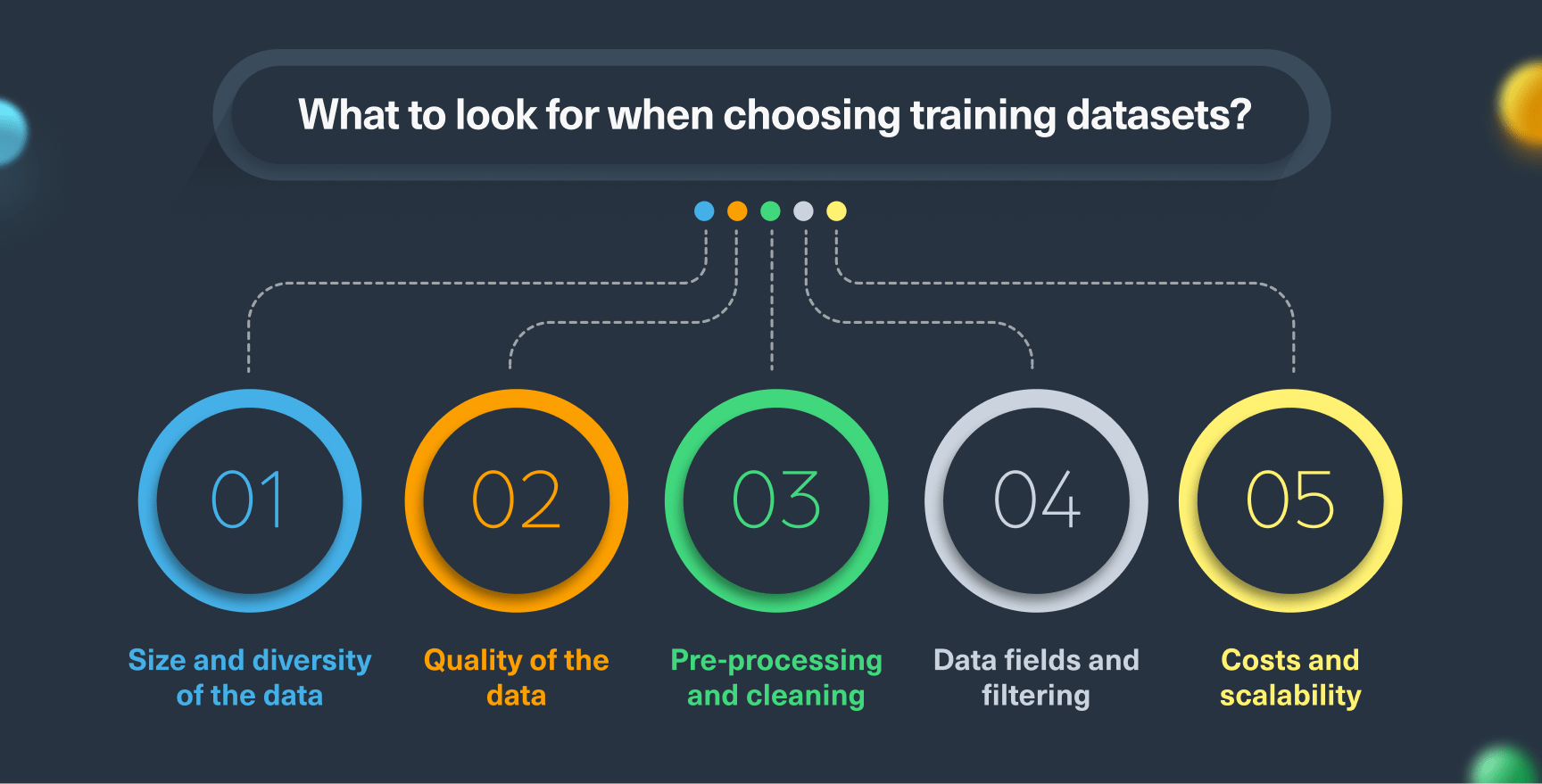

If you’re looking for data to pre-train your language model, you should consider the following:

Common Crawl has a massive repository of web crawl data. However, internet access is unevenly distributed, so Common Crawl data overrepresents younger users from developed countries who primarily speak English. The data lacks the even diversity and representation needed for large language models.

Webz.io also collects data from the internet, but we take steps to streamline and diversify our datasets so that you can perform data analysis as accurately and fairly as possible. We also provide specialized datasets, like Environmental, Social, and Governance (ESG) data. ESG data helps you measure different societal factors, such as equity, diversity, and inclusion. Recent research by Microsoft has shown that larger models with more diverse and comprehensive pre-training data will perform better at generalizing to multiple downstream tasks, even when you have fewer training examples.

You get a LOT of noise and unwanted content with Common Crawl data.

Common Crawl does not provide real-time data. They provide large data files you can download, and the age of the data varies depending on when you download it.

Webz.io, on the other hand, provides real-time and historical datasets. Plus, all the data we collect from the open, deep, and dark web has been cleaned, restructured, enriched, and converted into JSON, XML, and Excel formats. You get NO noise or irrelevant content with our data. Because we structure the data, you get numerous fields which make the data far more useful. For example, we include a “publication date” field which puts a time relevance to the content. Common Crawl does not include this field, and the data goes back to 2008, so you could wind up with outdated information.

You need high-quality data to successfully train large language models — or any AI models for that matter. A recent paper from DeepMind about training compute-optimal large language models says that “speculatively, we expect that scaling to larger and larger datasets is only beneficial when the data is high-quality. This calls for responsibly collecting larger datasets with a high focus on dataset quality.”

Generally, data professionals spend 38% of their time cleaning and preparing the data for AI or ML models. If you use Common Crawl data for your large language model, you’ll likely spend a LOT more of your time cleaning and preparing the data — because it contains so much noise and unwanted content.
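To give a feel for the kind of cleaning this involves, here is a minimal sketch of one early step: pulling visible text out of raw HTML. It uses only Python's standard library. The class name `VisibleTextExtractor`, the helper `html_to_text`, and the sample page are made up for illustration; a real pipeline would also need deduplication, language detection, boilerplate removal, and more.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect visible text and skip the contents of script/style tags."""

    SKIPPED_TAGS = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped tag.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def html_to_text(raw_html):
    """Return the visible text of an HTML string."""
    parser = VisibleTextExtractor()
    parser.feed(raw_html)
    parser.close()
    return parser.text()


# Illustrative sample page, not real crawl data.
sample = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><p>Port delays</p><script>track()</script>"
    "<p>continue.</p></body></html>"
)
print(html_to_text(sample))
```

Even this small step has to make judgment calls (which tags to skip, how to join text), and those choices multiply across billions of pages.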

With Webz.io, you don’t have to spend time on data cleaning or preparation — we’ve already done that for you. You can skip these steps and go right to creating queries and analyzing the data. While you have to pay to use our data, you don’t have to spend as much time on pre-processing and cleaning as you would with Common Crawl. What you pay in data feeds, you save on data preparation costs.

Common Crawl data comes in large raw data files with very few relevant fields, which makes filtering a challenge. You have to figure out a filtering method that works best for you. For example, you might create a block list filter to remove unwanted text or use n-grams to extract significant portions of the text. You can also find pre-filtered sets of Common Crawl data, like the Colossal Clean Crawled Corpus (C4). These cleaned versions of CC data usually contain a small number of filterable fields, like text, content length, timestamp, content type, and URL.
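The two filtering ideas mentioned above, a block list and n-grams, can be sketched in a few lines of Python. This is only a rough illustration: the block list terms, the sample documents, and the helper names (`passes_block_list`, `top_ngrams`) are all assumptions, and production filters are usually far more elaborate.

```python
import re
from collections import Counter

# Hypothetical block list; a real one would be much longer.
BLOCK_LIST = {"casino", "viagra"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def passes_block_list(text, block_list=BLOCK_LIST):
    """Return True when no token of the text appears in the block list."""
    return not any(token in block_list for token in tokenize(text))


def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(documents, n=2, k=3):
    """Count n-grams across all documents and return the k most frequent."""
    counts = Counter()
    for doc in documents:
        counts.update(ngrams(tokenize(doc), n))
    return counts.most_common(k)


# Illustrative documents, not real crawl data.
docs = [
    "Port congestion delays container shipping",
    "Win big at the casino tonight",
    "Container shipping rates rise as port congestion grows",
]

kept = [doc for doc in docs if passes_block_list(doc)]
print(kept)
print(top_ngrams(kept, n=2, k=2))
```

The block list drops whole documents, while the n-gram counts point to recurring phrases you might keep or use as signals for further filtering.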

Fields are handy for filtering data, and the more relevant fields available, the easier and faster you can filter the data and make it usable for your model. Common Crawl data includes the following fields: title, text, URL, crawl timestamp, content type, and content size.

Webz.io provides structured web data with extracted, inferred, and enriched fields. We identify every source we crawl as a “post,” which is an indexed record matching a specific news article, blog post, or online discussion post or comment. We then extract standard fields from these source types, such as a URL, title, body text, or external links. Our fields break down into three main types: extracted, inferred, and enriched.

If you want to filter Common Crawl data by specific keywords, languages, and topics, you need to sift through vast amounts of text and then analyze it, which takes a lot of time and resources. With Webz.io, you can filter the data by the many fields we’ve already extracted. With a high-quality paid dataset, you can quickly filter down to the relevant data you need for your model or application.
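Once records already carry fields, filtering becomes a few simple comparisons. The sketch below assumes a handful of made-up records; the field names (`title`, `language`, `published`, `text`) and the `filter_posts` helper are hypothetical and are not the actual Webz.io schema or API.

```python
from datetime import datetime, timezone

# Hypothetical structured records; field names are assumptions for illustration.
posts = [
    {"title": "Port strike halts shipments", "language": "english",
     "published": "2023-03-01T08:00:00+00:00",
     "text": "A port strike has halted container shipments."},
    {"title": "Old port news", "language": "english",
     "published": "2019-05-10T08:00:00+00:00",
     "text": "The port expanded its terminal."},
    {"title": "Hafenstreik", "language": "german",
     "published": "2023-03-02T08:00:00+00:00",
     "text": "Der Hafenstreik dauert an."},
    {"title": "Chip shortage eases", "language": "english",
     "published": "2023-02-01T08:00:00+00:00",
     "text": "Semiconductor availability improves."},
]


def filter_posts(posts, language=None, published_after=None, keyword=None):
    """Keep posts that match every filter that was supplied."""
    selected = []
    for post in posts:
        if language and post["language"] != language:
            continue
        published = datetime.fromisoformat(post["published"])
        if published_after and published < published_after:
            continue
        if keyword and keyword.lower() not in post["text"].lower():
            continue
        selected.append(post)
    return selected


cutoff = datetime(2023, 1, 1, tzinfo=timezone.utc)
recent_port_news = filter_posts(
    posts, language="english", published_after=cutoff, keyword="port"
)
print([post["title"] for post in recent_port_news])
```

Note that the publication date filter is only possible because each record carries that field. With raw crawl data you would first have to infer the date from the page itself.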

The cost of using Common Crawl for a large language model will vary from project to project.

You need to figure out all the processes and services required for your LLM project and then do some fairly complex calculations. AWS, Google Cloud, and Microsoft Azure provide pricing calculators you can use to estimate the cost of their cloud services. You must also factor in the time and cost of cleaning, preparing, and filtering the data.
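As a rough way to organize that calculation, you can add up the main cost components in a small script. Everything in this sketch is an assumption: the `CostInputs` fields are just one possible breakdown, and the numbers are placeholders chosen for easy arithmetic, not real prices. Take actual rates from your cloud provider's pricing calculator.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    """Hypothetical cost components for a pre-training data pipeline."""
    data_license_usd: float          # 0 for a free dataset
    storage_tb: float
    storage_usd_per_tb_month: float
    months: int
    compute_hours: float
    compute_usd_per_hour: float
    prep_hours: float                # human time spent cleaning and filtering
    prep_usd_per_hour: float


def estimate_total_cost(inputs):
    """Return each cost component and their sum, in USD."""
    storage = inputs.storage_tb * inputs.storage_usd_per_tb_month * inputs.months
    compute = inputs.compute_hours * inputs.compute_usd_per_hour
    prep = inputs.prep_hours * inputs.prep_usd_per_hour
    total = inputs.data_license_usd + storage + compute + prep
    return {
        "data": inputs.data_license_usd,
        "storage": storage,
        "compute": compute,
        "prep": prep,
        "total": total,
    }


# Placeholder values only; replace them with your own estimates.
example = CostInputs(
    data_license_usd=0,
    storage_tb=10,
    storage_usd_per_tb_month=20,
    months=3,
    compute_hours=100,
    compute_usd_per_hour=2,
    prep_hours=40,
    prep_usd_per_hour=50,
)
print(estimate_total_cost(example))
```

Running the same function with two sets of inputs, one for a free raw dataset and one for a paid cleaned dataset, makes the trade-off between license cost and preparation cost explicit.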

If you’d like to get an idea of what it could cost to train your LLM using Common Crawl data, check out this blog post by Ben Cottier on LessWrong. 82% of the tokens used to train GPT-3 came from Common Crawl web scraped data. Cottier estimates that the cost of Common Crawl data for GPT-3 from 2016-2019 — which entails scraping the data, hosting the data files, and some human labor — is about $400K.

You’ll still have cloud service and compute costs if you use Webz.io data for your LLM project. But you’ll significantly reduce your costs when it comes to obtaining and processing pre-training data. You may get 10X as many documents from Common Crawl as you would from Webz.io, but you have to do a lot of work to make that data usable.

Webz.io is a Data as a Service (DaaS) provider that offers APIs to easily integrate our data feeds with your applications and systems. A DaaS allows you to scale the data automatically as your LLM application grows. You need additional skills and resources to scale Common Crawl data as your model or application becomes larger.

You need data to train language models, and that data will cost you. How much it will cost you depends on whether you choose to clean and filter an open-source dataset like Common Crawl or use a noise-free commercial dataset with easily filterable fields. Cleaning, structuring, and filtering are half the battle when generating pre-training sets for LLMs. And with Webz.io, you can win that battle with far less time, effort, and cost.

Want to learn more about how Webz.io can help you create more accurate and relevant models? Fill out this form to schedule a chat with one of our data experts.
