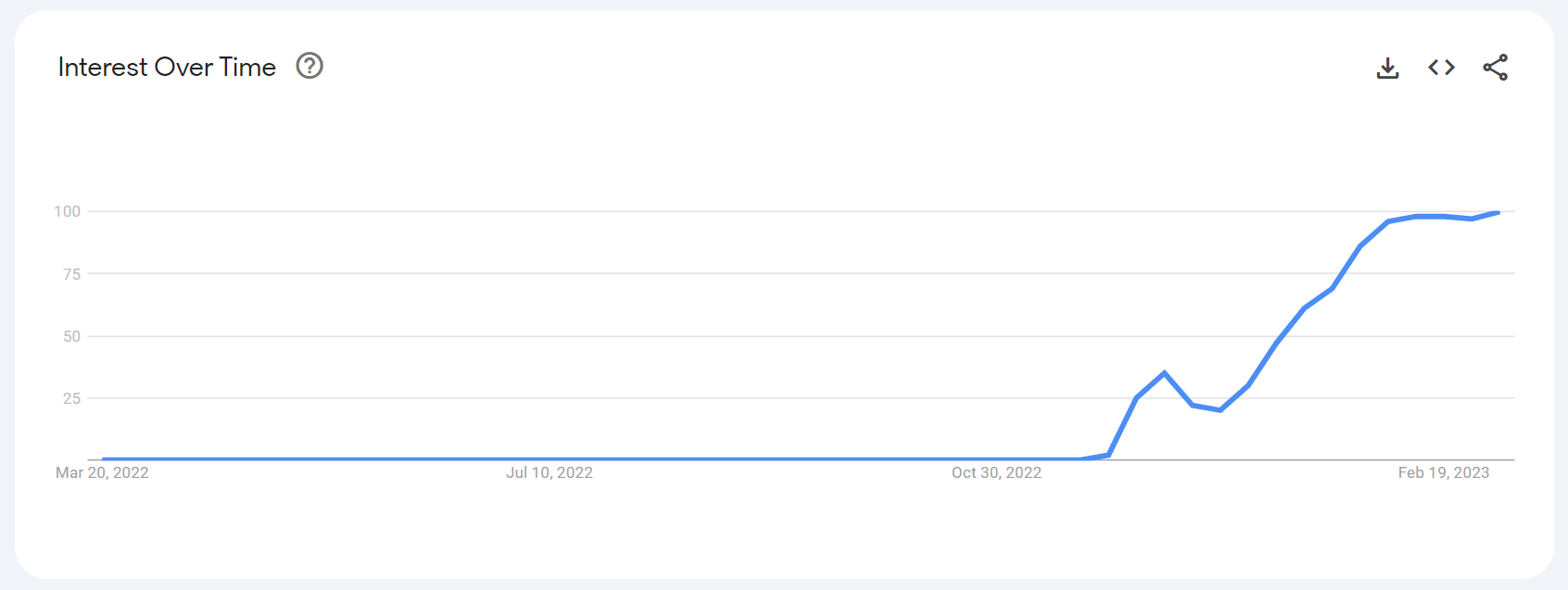

Large language models (LLMs) — we’ve been hearing about them a lot lately. While LLMs have been around for a while now, the November 2022 release of OpenAI’s ChatGPT went viral, launching LLMs into mainstream news. More people than ever before have become interested in these specialized AI models, and we’re seeing a rapidly increasing number of companies developing or using them. Every step of LLM development takes a lot of work and precision, especially data preprocessing. Data preprocessing lays the foundation for every powerful LLM, and you can strengthen that foundation by using high-quality structured web data throughout this process.

What is data preprocessing?

In the context of LLMs, data preprocessing is the process of taking raw or unstructured data and transforming it into a format that algorithms can understand and that works well for language model training. Most LLM developers train their models on non-curated, open-source web data. These datasets typically have various problems, such as missing values, junk data, incorrect data types, and data outliers. If you feed a model poor-quality training data, it will perform poorly, or sometimes not at all.

The main challenges of data preprocessing for language models

Preprocessing data for a large language model presents many challenges for those assigned to this task. Most of these challenges have to do with data collection and cleaning.

Data collection

You need to find a LOT of diverse data to train your LLM, and then you need to figure out how to collect it. Many LLM developers use massive web datasets from repositories like Common Crawl (which we’ve reviewed recently) or collect data from large public sites like Wikipedia. Common Crawl does not provide real-time data, and its archives include pages crawled at any time since 2008.

Data cleaning

Most publicly available web data is non-curated and unstructured, so you must spend a lot of time cleaning and structuring it before it works well for language model training. Cleaning is labor-intensive because you often run into issues you must fix, such as:

- Noisy data (e.g., spam data, raw HTML, offensive content)

- Duplicate data

- Irrelevant data

- Junk data (e.g., gibberish, boilerplate)

- Inconsistent data (e.g., typos, variant spellings)

- Missing values

- Incorrect data types (e.g., numbers stored as strings instead of integers or floats)

- Data outliers (extreme data points compared to the rest of the data)

- Not representative (bias)
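To make a few of these cleaning steps concrete, here is a minimal Python sketch. It assumes each raw record is a dict with a hypothetical `text` field, and it covers only some items from the list: missing values and incorrect types, raw HTML, junk, exact duplicates, and length outliers. The thresholds (`min_words`, `min_alpha_ratio`, and the IQR multiplier `k`) are illustrative guesses, not recommended values.

```python
import hashlib
import re
import statistics

# Assumption: each raw record is a dict with a "text" field. This is a
# hypothetical format for illustration, not any particular dataset's schema.

TAG_RE = re.compile(r"<[^>]+>")

def strip_html(text: str) -> str:
    """Crude tag removal; a production pipeline would use a real HTML parser."""
    return TAG_RE.sub(" ", text)

def looks_like_junk(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Flag very short texts and texts made mostly of symbols (illustrative thresholds)."""
    if len(text.split()) < min_words:
        return True
    non_space = [ch for ch in text if not ch.isspace()]
    alpha = sum(ch.isalpha() for ch in non_space)
    return alpha / len(non_space) < min_alpha_ratio

def clean_corpus(records: list[dict]) -> list[dict]:
    """Drop missing/mistyped text, strip tags, filter junk, and remove exact duplicates."""
    seen: set[str] = set()
    cleaned = []
    for rec in records:
        text = rec.get("text")
        if not isinstance(text, str):  # missing value or incorrect data type
            continue
        text = " ".join(strip_html(text).split())  # strip tags, collapse whitespace
        if looks_like_junk(text):
            continue
        # Hash a lowercased copy so copies differing only in case or spacing match.
        key = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({**rec, "text": text})
    return cleaned

def drop_length_outliers(records: list[dict], k: float = 1.5) -> list[dict]:
    """Remove documents whose word count falls outside Tukey's IQR fences."""
    if len(records) < 4:
        return records
    lengths = [len(r["text"].split()) for r in records]
    q1, _, q3 = statistics.quantiles(lengths, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [r for r, n in zip(records, lengths) if low <= n <= high]
```

Exact hashing only catches copies that match after whitespace and case normalization; web-scale pipelines typically add near-duplicate detection (for example, MinHash) and language-specific quality filters on top.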

On average, data professionals spend 38% of their time cleaning and preparing data for model training. If your data has a wide range of problems, you’ll spend even more time making sure it’s good enough to train your model.

Google, Microsoft, and OpenAI all have teams spending many hours preprocessing data for their LLMs. However, these LLMs still produce unpredictable and inaccurate results at times.

Big-tech LLM errors make the news

LLMs have been all over the news lately, both because they are fantastic pieces of technology and because they often make factual errors or even hallucinate. In the context of LLMs, a hallucination is output that isn’t supported by the training data, where the model essentially “makes things up.” LLMs from Google, Microsoft, and OpenAI have all made the news because of factual errors:

OpenAI’s ChatGPT

A recent Search Engine Journal article highlights how ChatGPT failed to correctly answer two questions about FIFA: “How many times did Argentina win the FIFA World Cup?” and “Which team won the FIFA World Cup in 1986?” A Fast Company article covers how ChatGPT gave a different incorrect answer each time it was asked the same question, “What was the first TV cartoon?”

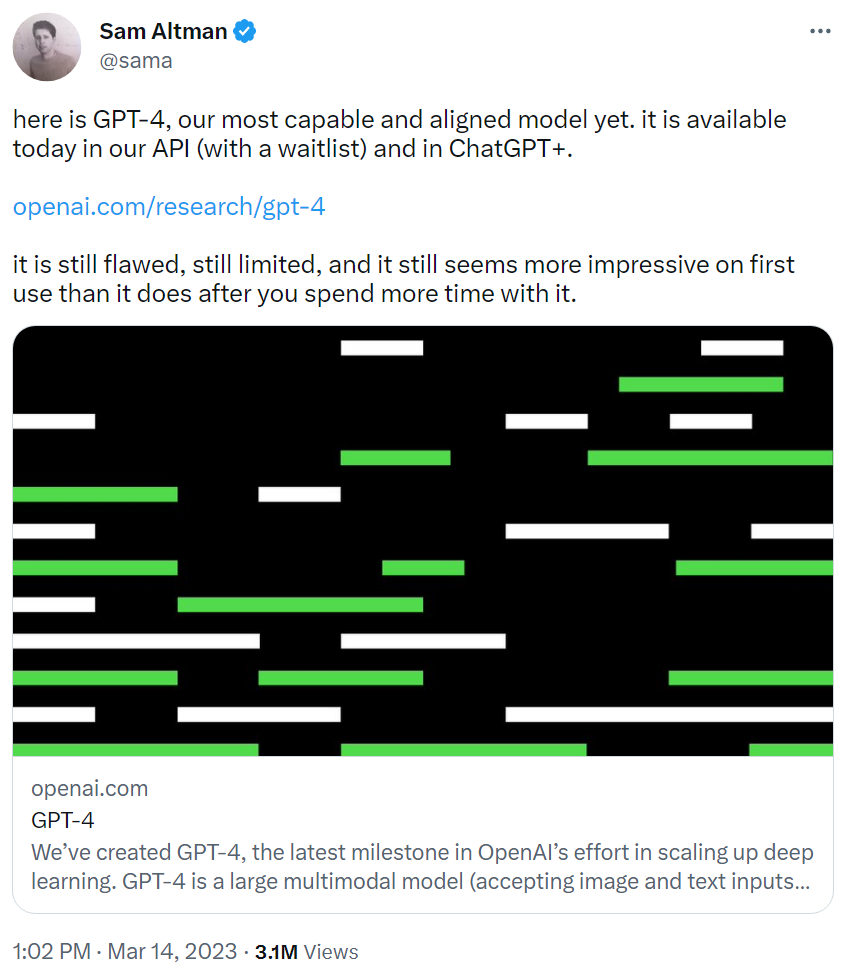

On March 14, 2023, OpenAI announced GPT-4, a large multimodal model (LMM) that accepts image and text inputs and produces text outputs. The company has made the text-input capability available through ChatGPT and its API.

Google’s Bard

Google’s Bard recently made the news because a GIF posted on Twitter to promote the LLM showed it giving incorrect information in response to the question, “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?” Multiple reports say that Alphabet shares dropped shortly after the Bard Twitter ad incident, wiping out an estimated $100 billion in market value. At the time of this writing, Bard is not generally available.

Microsoft’s Bing AI

In February 2023, Microsoft announced that Bing now runs on a next-generation OpenAI large language model that borrows elements from ChatGPT and GPT-3.5. The first AI-powered Bing demo contained several errors in financial information about the clothing retailers Gap and Lululemon. The LLM powering Bing has made other errors, too. For example, a Reddit user argued with Bing AI about the current year, and Bing AI insisted the year was 2022, not 2023.

The same day OpenAI announced GPT-4, Microsoft confirmed that the new Bing runs on GPT-4. The company has customized this new LMM for search, and you can sign up for the waiting list to preview the new Bing. Only time will tell if this new model improves the accuracy of Bing’s responses to user inputs.

Why do LLMs provide incorrect information at times?

In general, LLMs sometimes provide wrong answers or hallucinate because of problems with the training data.

We don’t know all the details about the training data used for these LLMs. However, at the time of this writing, we know that versions of ChatGPT released before March 2023 relied largely on web datasets from Common Crawl and that their training data cuts off in 2021. We also know that the training data for GPT-4 ends in September 2021. And according to OpenAI’s own blog post, although this new model hallucinates less than previous versions, it still “hallucinates facts and makes reasoning errors.”

Bard was trained on a dataset named Infiniset, and the cutoff date for this data is unclear. Little is known about the data used to train the LLM behind Bing AI. However, an article in The Verge says that the LLM for Bing AI has internal knowledge and information that cuts off sometime in 2021.

If the data used to train an LLM cuts off in 2021, the model can’t correctly answer questions that require more recent information. For example, Argentina won the World Cup in December 2022, but ChatGPT doesn’t know that because of its training data cutoff date. The quality of the training data also affects an LLM’s performance. We don’t have insight into the preprocessing pipelines for Bard, Bing AI, and ChatGPT. However, the many recent reports of errors and hallucinations from these LLMs suggest that their training data could use some improvement. And the best way to improve the training data is to incorporate structured web data into the data preprocessing pipeline.
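A practical consequence is that you should know the effective cutoff of your own corpus before training. Here is a minimal Python sketch, assuming each record carries a hypothetical `published` field in `YYYY-MM-DD` format. It reports the latest publication date and the share of documents published after a given date.

```python
from datetime import date
from typing import Optional

# Assumption: records are dicts with a hypothetical "published" field holding
# an ISO-8601 date string ("YYYY-MM-DD"); records without it are skipped.

def publication_dates(records: list[dict]) -> list[date]:
    """Parse the publication date of every record that has one."""
    return [date.fromisoformat(r["published"]) for r in records if r.get("published")]

def corpus_cutoff(records: list[dict]) -> Optional[date]:
    """Latest publication date: a model trained on this corpus can't know later events."""
    dates = publication_dates(records)
    return max(dates) if dates else None

def share_after(records: list[dict], when: date) -> float:
    """Fraction of dated records published strictly after `when`."""
    dates = publication_dates(records)
    return sum(d > when for d in dates) / len(dates) if dates else 0.0

docs = [{"published": "2020-01-15"}, {"published": "2021-09-30"}, {"published": "2022-12-18"}]
print(corpus_cutoff(docs))                               # 2022-12-18
print(round(share_after(docs, date(2021, 12, 31)), 2))   # 0.33
```

Reporting the share alongside the maximum matters because a handful of recent documents can make a corpus look fresher than it really is.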

Structured web data feeds can help you speed up data collection and improve preprocessing

Webz.io provides structured web data feeds that help reduce many of the problems that arise with data cleaning. We provide our feeds through a RESTful API or Firehose, which makes it easy to collect and integrate the data with models and applications. When you use structured web data, you can speed up preprocessing because you don’t have to spend as much time fixing problems with the data. You can also quickly filter the data so that you can train your model with high-quality, relevant data.

| Problems | How Webz.io structured web data feeds help |
|---|---|
| Difficult to collect web data | We provide our data through a RESTful API or Firehose; you only need to plug one of them into your application or system. We also make our data available in predefined verticals, including news, blogs, forums, and reviews. Dark and deep web verticals are also available. |
| Junk data, inconsistent data, missing values, incorrect data types | We’ve already cleaned and structured the data, so you don’t have to spend time fixing these data issues. |
| Noisy data | We only crawl sites with useful data, so you won’t see offensive content, data from spam websites, raw HTML and code, boilerplate text, or lorem ipsum text. You don’t have to spend time removing unwanted content. |
| Data outliers | In most cases, we clean the data to eliminate outliers, and you can choose to get higher accuracy if required. |
| Duplicate data | We index each URL only once. However, identical text can still appear on different domains. If you use our data from online discussion forums, you can apply a filter to exclude original-post text that is sometimes repeated in replies on the same thread. Note: If you use Webz.io along with other sources, you’ll need to de-duplicate on your end. |
| Irrelevant data | You can filter the data based on specific criteria, e.g., language, location, keywords, and sentiment. Filtering helps improve the relevance and quality of the data. |
| Out-of-date data | We provide real-time data, so users can access the latest information as soon as it becomes available. We also offer historical web data feeds. |
| Not representative (bias) | We provide web data from domains worldwide. It’s easier to reduce bias in your model if you use clean data from more domains and diverse datasets. |
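If you combine a structured feed with other sources, you still need to de-duplicate and filter on your end, as the table notes. Here is a minimal Python sketch of that step under stated assumptions: records are dicts with hypothetical `text` and `language` fields (not Webz.io’s actual schema), relevance is approximated with a simple keyword match, and near-duplicates are detected with word-shingle Jaccard similarity at an illustrative threshold of 0.8.

```python
import re
from typing import Iterable

# Assumption: records are dicts with hypothetical "text" and "language" fields;
# real feeds (Webz.io or otherwise) will use their own schemas.

WORD_RE = re.compile(r"\w+")

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences in a lowercased text."""
    words = WORD_RE.findall(text.lower())
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def merge_and_filter(sources: Iterable[list[dict]], language: str,
                     keywords: set[str], threshold: float = 0.8) -> list[dict]:
    """Merge sources, keep on-topic records in one language, and drop near-duplicates."""
    kept: list[dict] = []
    kept_shingles: list[set] = []
    for source in sources:
        for rec in source:
            text = rec.get("text")
            if not isinstance(text, str) or rec.get("language") != language:
                continue
            # Relevance filter: require at least one keyword (if any were given).
            if keywords and not (set(WORD_RE.findall(text.lower())) & keywords):
                continue
            sh = shingles(text)
            if any(jaccard(sh, seen) >= threshold for seen in kept_shingles):
                continue  # near-duplicate of a record already kept
            kept.append(rec)
            kept_shingles.append(sh)
    return kept
```

Because every new record is compared against everything already kept, this runs in quadratic time; for large corpora, MinHash with locality-sensitive hashing approximates the same Jaccard comparison far more cheaply.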

Data preprocessing can make or break your LLM

The data you use to train your LLM affects its performance. If you train your model on flawed data, it can produce inaccurate or unexpected results. If you give it high-quality training data, you’ll see better performance. Preprocessing is the step in LLM development that determines whether your model will succeed or fail, and the data you choose to use during this step makes a huge difference.

Want to learn more about how structured web data can help you optimize and scale data preprocessing for large language model training? Schedule a chat with one of our web data experts.