Is ChatGPT Abused on the Dark Web?

ChatGPT, the AI chatbot launched by OpenAI in November 2022, has drawn a lot of attention worldwide. Its ability to carry out natural language generation tasks with a high level of accuracy caused a great deal of excitement. Alongside the buzz, it has also piqued the interest of the security and cybersecurity industries, which are now examining the potential threats that ChatGPT and similar platforms may pose.

ChatGPT and cyber threats

Since its launch, ChatGPT has caused a stir within the ever-evolving cyber threat landscape, with many fearing that its code generation capabilities could help less-skilled threat actors launch cyberattacks with little effort.

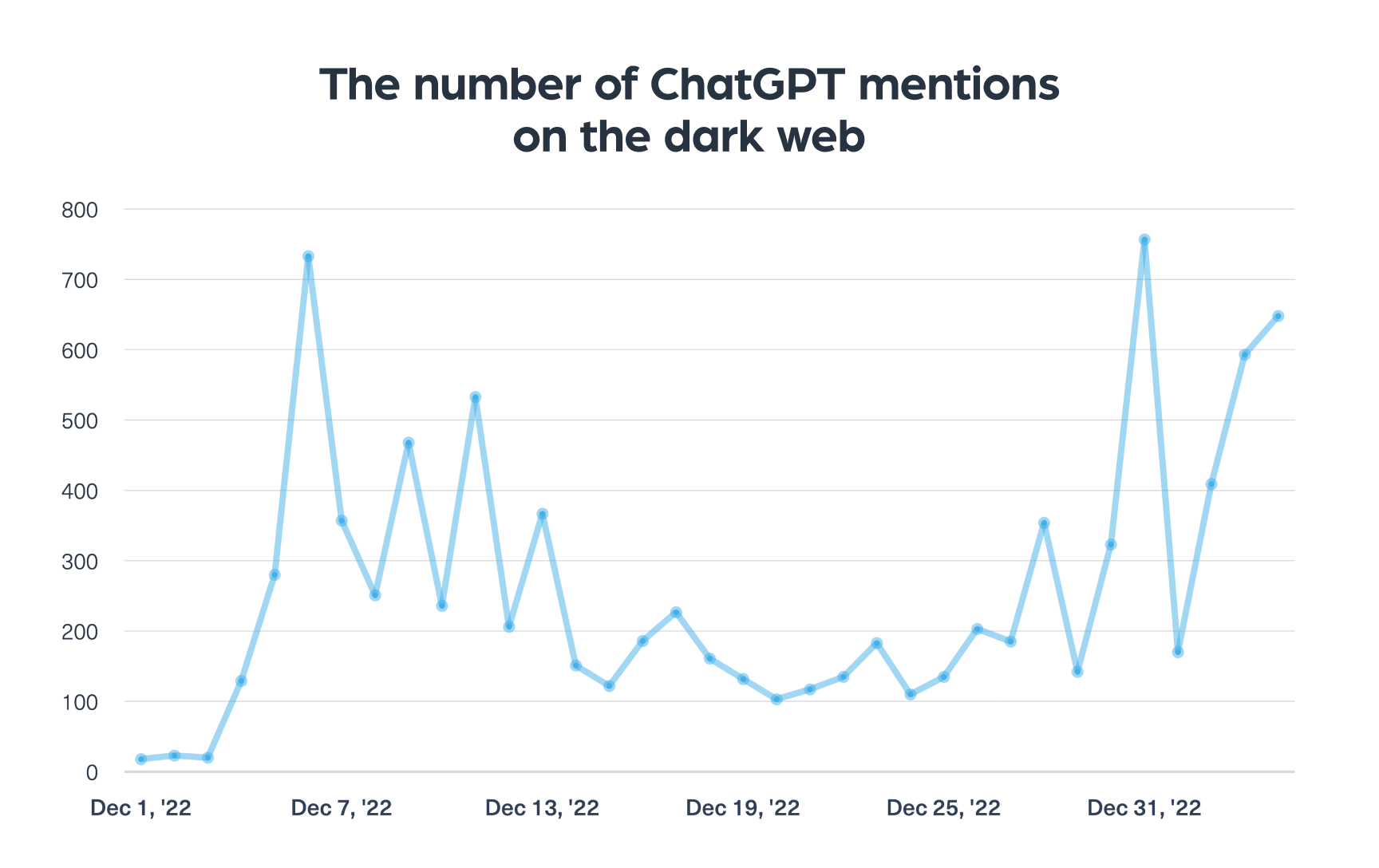

We decided to see whether the attention ChatGPT received on the open web was also reflected on the dark web. Using our dark web data, we tracked the number of ChatGPT mentions on the deep and dark web over the past two months.

As the chart shows, there has been a clear increase in the number of ChatGPT mentions since early January.

The first spike in ChatGPT mentions on the dark web came in December, indicating that some dark web users had started discussing it. This is not surprising, since these users tend to be news-savvy and keep up with global cyber trends.

After a short plateau, there is a significant spike, which indicates growing interest in ChatGPT within the cybercriminal community.
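To make the idea of "counting mentions" concrete, here is a minimal sketch of how monthly mention counts like those in the chart could be tallied. It assumes posts have already been collected as (date, text) pairs; the function name `mentions_per_month`, the regular expression, and the sample posts are hypothetical illustrations, not our actual data or pipeline.

```python
import re
from collections import Counter
from datetime import date

# Hypothetical sample data: (post date, post text) pairs invented for illustration.
POSTS = [
    (date(2022, 12, 5), "Anyone tried ChatGPT yet?"),
    (date(2022, 12, 20), "chatgpt writes decent python"),
    (date(2023, 1, 9), "ChatGPT tricks thread"),
    (date(2023, 1, 10), "More on Chat GPT"),
    (date(2023, 1, 15), "unrelated post"),
]

# Case-insensitive whole-word match for "ChatGPT" or "Chat GPT" (an assumed keyword rule).
MENTION = re.compile(r"\bchat\s?gpt\b", re.IGNORECASE)


def mentions_per_month(posts):
    """Count posts that mention ChatGPT, bucketed by (year, month)."""
    counts = Counter()
    for posted_on, text in posts:
        if MENTION.search(text):
            counts[(posted_on.year, posted_on.month)] += 1
    # Return buckets in chronological order, ready for plotting.
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    for (year, month), n in mentions_per_month(POSTS).items():
        print(f"{year}-{month:02d}: {n}")
```

A plain keyword match like this is only a starting point; real monitoring would also need to handle duplicate posts, reposts across forums, and misspellings.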

How can ChatGPT be used by cybercriminals for malicious activity?

The dark web is used daily by cybercriminals to discuss and share information about malicious activity. When we looked at the data, we found indications that ChatGPT was being used for malicious purposes.

Generating malicious code

ChatGPT can be used to generate code that supports malicious activity, including:

- The creation of malicious software, such as trojans and other malware, which is used to hack and compromise computer systems, as can be seen in example #2 below

- Building dark web platforms which are used by threat actors to host illegal activity, including complex decentralized marketplaces

Social engineering

ChatGPT can be used to generate personalized messages to trick users into making security mistakes or giving away sensitive information.

This kind of manipulation for malicious purposes is called social engineering. One of the most popular ChatGPT-based social engineering attacks to emerge from the dark web is the honeypot scam, as you can see in example #1 below.

Phishing scams

Many tutorials have been published on the dark web and on alternative social media, including guides on how to use ChatGPT to generate convincing phishing emails or messages that trick people into downloading malicious software or divulging their personally identifiable information (PII). While the creation of phishing tools is less common at the moment, it has been discussed on dark web platforms as well.

Identifying actual vulnerabilities

ChatGPT could also potentially be used to detect security vulnerabilities when given a piece of code to analyze. A cybercriminal could then exploit any vulnerability it identifies to gain unauthorized access to a computer system.

Now let’s take a look at real-life dark web mentions of ChatGPT within cybercriminal discussions.

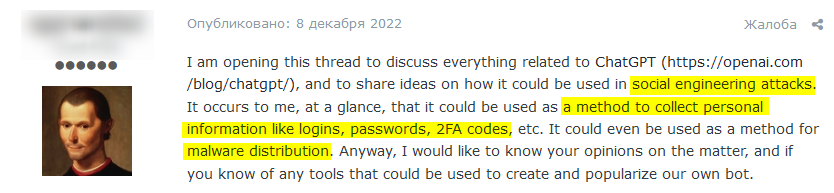

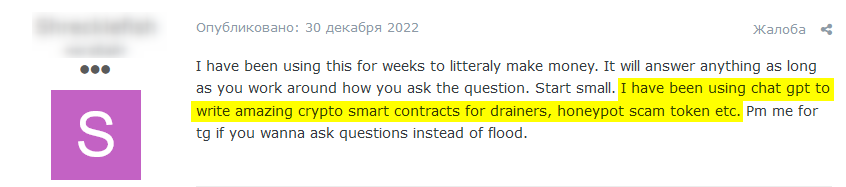

Example #1: Fraud discussions

You can see an example of social engineering threats in the following thread, published on the popular underground forum Dread:

This thread centered on sharing ideas about methods for carrying out social engineering attacks using ChatGPT. The user who started the thread was looking for techniques for stealing login credentials and spreading malware.

One forum member replied, suggesting starting with seemingly simpler methods he had been using ChatGPT for to make money. The first ChatGPT-based scams he mentioned were crypto drainers, malicious actors or software programs that steal cryptocurrency from individual or institutional wallets using various fraudulent techniques.

The second method he mentioned was the crypto honeypot, a fraudulent scheme in which hackers lure unsuspecting victims into depositing funds into a fake investment platform with the promise of high returns:

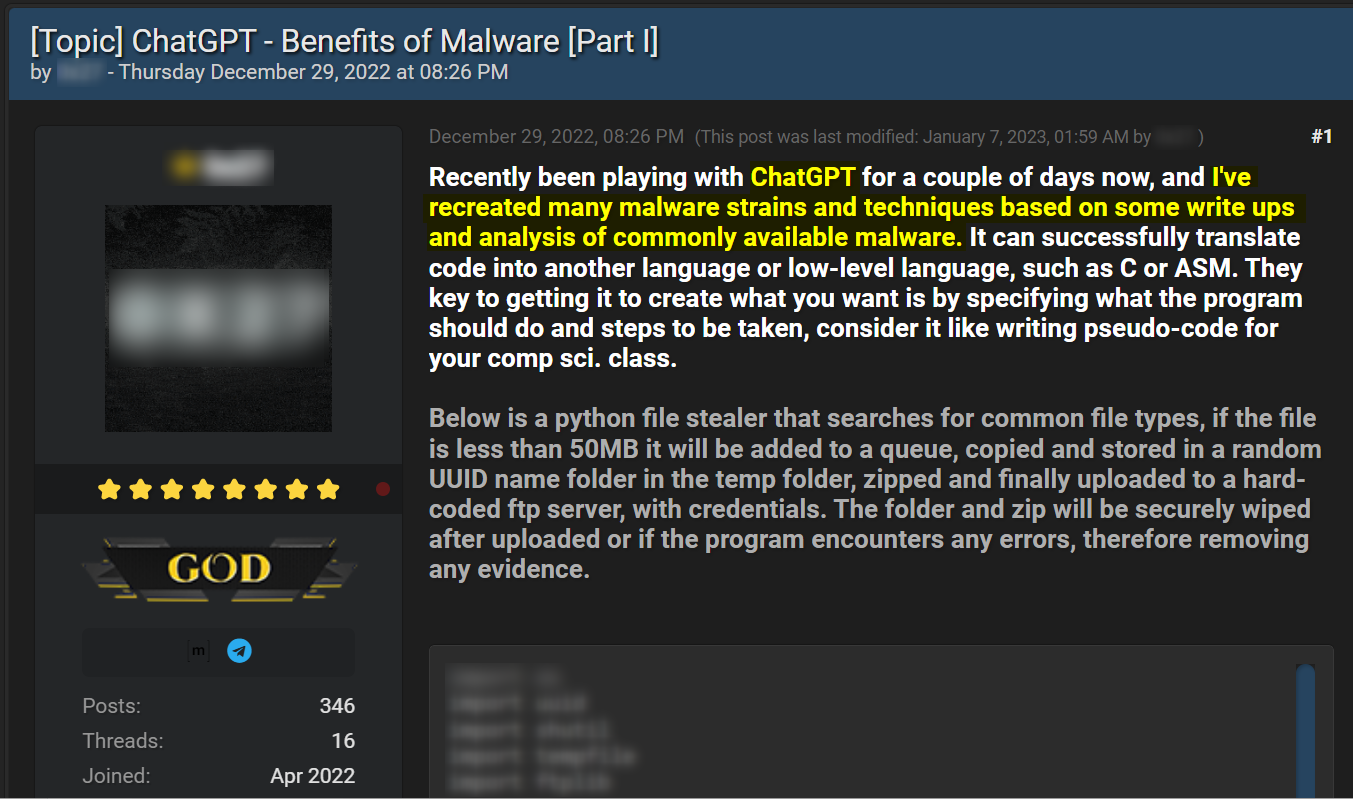

Example #2: Generation of malicious content

An example of ChatGPT being used to create malicious content can be seen below in a thread titled “ChatGPT – Benefits of Malware”, which appeared on BreachedForums on December 29, 2022. The author of the thread revealed that he had been experimenting with ChatGPT to recreate malware strains and techniques described in research publications and write-ups about common malware.

To illustrate his point, he shared the code of a Python-based stealer, a type of trojan that steals sensitive information, such as usernames and passwords, from victims:

ChatGPT and cybercriminals – what’s next?

ChatGPT's ease of use and its capacity to be adapted for malicious purposes mean that cybercriminals are very likely to continue using it. Because it allows even inexperienced cybercriminals to create and launch cyberattacks easily, we may see a greater number of threat actors entering this realm.

While we do not know what the future holds for ChatGPT and its uses, the threats it poses today should concern all organizations and individuals.

For this reason, comprehensive monitoring of dark web discussions about the malicious use of ChatGPT is critical for cyber threat intelligence companies.