Sentiment classification is a fascinating use case for machine learning. Regardless of complexity – you need two core components to deliver meaningful results; a machine learning engine and a significant volume of structured data to train that engine.

Last month, we added the new “rating” field for rated review sites covered in the Webz.io threaded discussions data feed. With millions of rated reviews, anyone can access high quality structured datasets that include a natural language string and its respective numerical representation of sentiment classification – the familiar star rating of 1 through 5.

In this blog post, we show you how to collect your own training datasets of rated reviews and use them to train a model classification (we worked with Stanford NLP, but you can use the classification engine that makes sense for your model). For simplicity, any review of 4 stars and above (rating:>4) is assigned a positive sentiment, while 2 and below (rating:<2) is considered negative.

For our demo, we put together five datasets; Two pairs of train/test split 80% / 20% respectively and another test dataset:

- General domain model training dataset (80% subset)

- General domain model test dataset (remaining 20% subset)

- Domain specific training dataset (80% subset)

- Domain specific test dataset (remaining 20% subset)

- Domain specific “blind” dataset never introduced during the training to run the final test

Domain specificity can dramatically improve the results of a sentiment classification engine. For example, a reference to “bugs” in a hotel review is very likely negative. However, a discussion of bugs in a software code review won’t necessarily trigger a negative signal to a sentiment classification engine.

All code samples are freely available on our Sentiment Classifier library on Github. Here’s what you’ll need to set it up yourself:

- Terminal

- Python 2.7 or above

- Java 8

- Webz.io free account TOKEN for 1000 renewable monthly requests

- Webz.io Python SDK

1. Setup

Let’s get the basics taken care of:

Install the Webz.io Python SDK

|

1 2 3 |

$ git clone https://github.com/Buzzilla/Webz-python $ cd Webz-python $ python setup.py install |

Install Apache-Maven and Create a project template:

|

1 2 |

$ cd PROJECT_LOCATION $ mvn archetype:generate -DgroupId=com.Webz.reviewSentiment-DartifactId=review-sentiment -DarchetypeArtifactId=maven-archetype-quickstart-DinteractiveMode=false |

2. Rated Review Dataset Collection

The first component of our code foundation is a python script that uses the Webz-python SDK to collect the rated reviews that will make up our datasets.

The output of this script is a ‘resources’ directory, which will contain the train/test files for our engine.

2.1 Set the project directory via Terminal

|

1 |

$ cd PROJECT_LOCATION/review-sentiment |

2.2 Create the python file which will collect the training/testing data

|

1 |

$ touch collect_data.py |

2.2 Edit the file ‘collect_data.py’ with a Text Editor or an IDE:

2.2.1 First step of the script is to cover our imports (3rd-party modules), so add those imports to the top of the script

|

1 2 3 4 5 6 7 8 9 |

from __future__ import division import os import re import time import Webz |

2.2.2 Initialize the Webz.io SDK with your private TOKEN

|

1 2 3 |

Webz_API_TOKEN = 'YOUR_Webz_API_TOKEN' Webz.config(Webz_API_TOKEN) |

2.2.3 Set the relative location of the train/test files

|

1 |

resources_dir = './src/main/resources' |

2.2.4 Build the generic function that will get the necessary data for us from Webz.io, after getting the data the function will create the relevant files inside the ‘resources’ directory.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

def collect(filename, query, limit, sentiment, partition): lines = set() # Collect the data from Webz.io with the given query up to the given limit response = Webz.search(query) while len(response.posts) > 0 and len(lines) < limit: # Go over the list of posts returned from the response for post in response.posts: # Verify that the length of the text is not too short nor too long if 1000 > len(post.text) > 50: # Extracting the text from the post object and clean it text = re.sub(r'(\([^\)]+\)|(stars|rating)\s*:\s*\S+)\s* |

2.2.4 Build the queries for the relevant data, and create the files.

Add the ‘__main__’ section of the code, in every call for the ‘collect()’ function, we are passing the filename we want the train/test files to be called, the actual query to Webz.io for the specific data, the limit of lines of text we want to proccess and save, the sentiment class (positive/negative) for the current query and the partition of the recieved data between the train and the test file (80%/20% train/test split)

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

if __name__ == '__main__': # Create the resources directory if not exists if not os.path.exists(resources_dir): os.makedirs(resources_dir) # Get reviews from various sources for training and testing the general classifier, overall of 400 lines, # split the lines 80%/20% between the general.train file and the general.test file collect('general', 'language:english AND rating:>4 -site:booking.com -site:expedia.*', 400, 'positive', 4/5) collect('general', 'language:english AND rating:<2 -site:booking.com -site:expedia.*', 400, 'negative', 4/5) # Get reviews from booking.com for training and testing the domain-specific classifier, overall of 400 lines, # split the lines 80%/20% between the booking.train file and the booking.test file collect('booking', 'language:english AND rating:>4 AND site:booking.com', 400, 'positive', 4/5) collect('booking', 'language:english AND rating:<2 AND site:booking.com', 400, 'negative', 4/5) # Get reviews from expedia.com for a later tests, overall of 300 lines all lines will be saved on the expedia.test collect('expedia', 'language:english AND rating:>4 AND site:expedia.com', 300, 'positive', 0) collect('expedia', 'language:english AND rating:<2 AND site:expedia.com', 300, 'negative', 0) |

2.3 Finally let’s run the script from the Terminal to collect the data and create the files:

|

1 |

$ python PROJECT_LOCATION/review-sentiment/collect_data.py |

- Build the classifier models

We can now build 2 classifier models with the collected datasets above. For this demonstration we chose the stanford-nlp classifier. In this case our two identified classes were: Positive and Negative, and the respective strings of text.

The classification project is going to be written in java using maven, so let’s open the project and start working.

3.1 Get into the project directory via Terminal

|

1 |

$ cd PROJECT_LOCATION/review-sentiment |

3.2 Add the project Dependencies (3rd-party packages), by adding the following to the file ‘src/main/pom.xml’ under the ‘<dependencies>’ tag

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

<dependency> <groupId>com.google.guava</groupId> <artifactId>guava</artifactId> <version>14.0.1</version> </dependency> <dependency> <groupId>log4j</groupId> <artifactId>log4j</artifactId> <version>1.2.17</version> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-log4j12</artifactId> <version>1.7.7</version> </dependency> <dependency> <groupId>edu.stanford.nlp</groupId> <artifactId>stanford-corenlp</artifactId> <version>3.6.0</version> </dependency> <dependency> <groupId>edu.stanford.nlp</groupId> <artifactId>stanford-corenlp</artifactId> <version>3.6.0</version> <classifier>models</classifier> </dependency> |

3.3 Create a properties file to initiate the classification models.

Let’s create that file for both of our models inside the ‘resources’ directory from stage 2, and save it as review-sentiment.prop.

Copy and paste the following properties and save the file:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

# # Features # useClassFeature=true 1.useNGrams=true 1.usePrefixSuffixNGrams=true 1.maxNGramLeng=4 1.minNGramLeng=1 1.binnedLengths=10,20,30 # # Printing # printClassifierParam=200 # # Mapping # goldAnswerColumn=0 displayedColumn=1 # # Optimization # intern=true sigma=3 useQN=true QNsize=15 tolerance=1e-4 |

3.3 Edit the JAVA code

|

1 |

Edit ‘src/main/java/com/Webz/reviewSentiment/App.java’ |

3.3.1 Imports

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

import com.google.common.io.Resources; import edu.stanford.nlp.classify.Classifier; import edu.stanford.nlp.classify.ColumnDataClassifier; import edu.stanford.nlp.ling.Datum; import edu.stanford.nlp.objectbank.ObjectBank; import java.io.IOException; import java.text.NumberFormat; |

3.3.2 Declare the stanford-nlp ‘Column Data Classifier’ class variable inside the ‘App’ class that was generated

|

1 |

private static ColumnDataClassifier cdc; |

3.3.3 Create the ‘getSentimentFromText’ function to retrieve text and a classifier object that returns the sentiment class of the given text

|

1 2 3 4 5 6 7 |

private static String getSentimentFromText(String text, Classifier<String,String> cl) throws Exception { Datum<String, String> d = cdc.makeDatumFromLine("\t" + text); return cl.classOf(d); } |

3.3.4 Create the ‘setScore’ function which retrieves a test file and a classifier object and returns the precision, recall and F1-score for both positive and negative classes

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 |

private static String setScore(String testFileName, Classifier<String,String> cl) { String results = ""; // Calculate the score of 'positive' class int tp = 0; int fn = 0; int fp = 0; for (String line : ObjectBank.getLineIterator(Resources.getResource(testFileName).getPath(), "utf-8")) { try { Datum<String, String> d = cdc.makeDatumFromLine(line); String sentiment = getSentimentFromText(line.replace(d.label()+"\t", ""), cl); // true-positive if (d.label().equals("positive") && sentiment.equals("positive")) { tp++; } // false-positive else if (d.label().equals("positive") && sentiment.equals("negative")) { fp++; } // false-negative else if (d.label().equals("negative") && sentiment.equals("positive")) { fn++; } } catch (Exception e) { e.printStackTrace(); } } NumberFormat percentFormatter = NumberFormat.getPercentInstance(); percentFormatter.setMinimumFractionDigits(1); double precision = (double)tp/(double)(tp+fp); double recall = (double)tp/(double)(tp+fn); results += "\nPositive Results:\n"; results += "Precision: " + percentFormatter.format(precision) + "\n"; results += "Recall: " + percentFormatter.format(recall) + "\n"; results += "F1: " + (2*precision*recall)/(precision+recall) + "\n"; // Calculate the score of 'negative' class tp = 0; fn = 0; fp = 0; for (String line : ObjectBank.getLineIterator(Resources.getResource(testFileName).getPath(), "utf-8")) { try { Datum<String, String> d = cdc.makeDatumFromLine(line); String sentiment = getSentimentFromText(line.replace(d.label()+"\t", ""), cl); // true-positive if (d.label().equals("negative") && sentiment.equals("negative")) { tp++; } // false-positive else if (d.label().equals("negative") && sentiment.equals("positive")) { fp++; } // false-negative else if (d.label().equals("positive") && sentiment.equals("negative")) { fn++; } } catch (Exception e) { e.printStackTrace(); } } percentFormatter.setMinimumFractionDigits(1); precision = (double)tp/(double)(tp+fp); recall = (double)tp/(double)(tp+fn); results += "\nNegative Results:\n"; results += "Precision: " + percentFormatter.format(precision) + "\n"; results += "Recall: " + percentFormatter.format(recall) + "\n"; results += "F1: " + (2*precision*recall)/(precision+recall) + "\n"; return results; } |

3.3.5 Create the ‘main’ function which initiates the general and the domain-specific machine and tests their score with the hotels input, and print the results

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 |

public static void main( String[] args ) throws IOException { // Constructing the ColumnDataClassifier Object with the properties file cdc = new ColumnDataClassifier(Resources.getResource("review-sentiment.prop").getPath()); // Declare and Construct the General Classifier with the general train file Classifier<String,String> generalCl = cdc.makeClassifier(cdc.readTrainingExamples(Resources.getResource("general.train").getPath())); // Declare and Construct the Domain-Specific Classifier with the general train file Classifier<String,String> hotelsCl = cdc.makeClassifier(cdc.readTrainingExamples(Resources.getResource("booking.train").getPath())); // General Classifier self test (using the 20% data-set from various sources) System.out.println("General Classifier stats:"); System.out.println(setScore("general.test", generalCl)); System.out.println(); // Domain-Specific Classifier self test (using the 20% data-set from booking.com) System.out.println("Domain-Specific Classifier stats:"); System.out.println(setScore("booking.test", hotelsCl)); System.out.println(); // Compare both of the classifiers with the estranged data-set (using the data from expedia.com) System.out.println("Comparison Results:"); System.out.println("General Classifier score:"); System.out.println(setScore("expedia.test", generalCl)); System.out.println(); System.out.println("Domain-Specific Classifier score:"); System.out.println(setScore("expedia.test", hotelsCl)); System.out.println(); } |

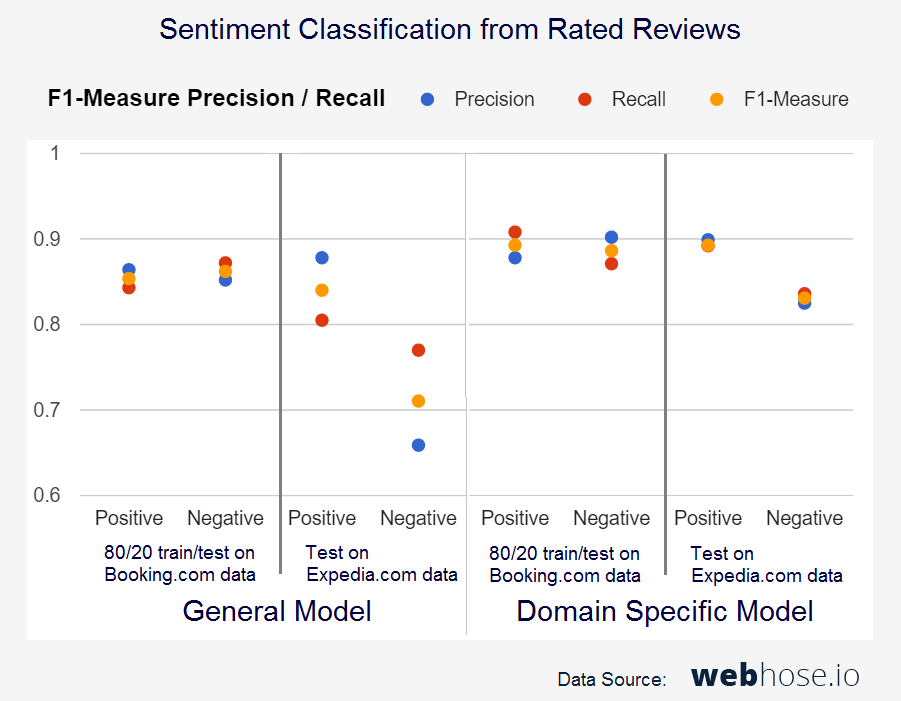

4. Evaluating Performance and Results

We can evaluate the performance of each model using the F1-Score method (essentially a harmonic average of precision and recall) for positive and negative sentiment classification produced by each model.

4.1. Test the score of each model using expedia.test dataset.

4.2. View the results and evaluate

As expected, the results clearly show that the domain-specific model generated by rated reviews of hotels delivers more precise performance.

| General model 80/20 train/test on Booking.com data |

Precision | Recall | F1-Measure | |

| Positive | 0.864 | 0.843 | 0.8536585366 | |

| Negative | 0.852 | 0.872 | 0.8620689655 | |

| General Model test on Expedia.com data |

||||

| Positive | 0.878 | 0.805 | 0.8400520156 | |

| Negative | 0.659 | 0.77 | 0.7105882353 | |

| Domain specific model 80/20 train/test on Booking.com data |

||||

| Positive | 0.878 | 0.908 | 0.8926553672 | |

| Negative | 0.902 | 0.871 | 0.8862275449 | |

| Domain specific Model test on Expedia.com data |

||||

| Positive | 0.899 | 0.892 | 0.8926553672 | |

| Negative | 0.825 | 0.836 | 0.8307692308 | |

|

1 |

, '', post.text.replace('\n', '').replace('\t', ''), 0, re.I) # add the post-text to the lines we are going to save in the train/test file lines.add(text.encode('utf8')) time.sleep(2) print 'Getting %s' % response.next # Request the next 100 results from Webz.io response = response.get_next() # Build the train file (first part of the returned documents) with open(os.path.join(resources_dir, filename + '.train'), 'a+') as train_file: for line in list(lines)[:int((len(lines))*partition)]: train_file.write('%s\t%s\n' % (sentiment, line)) # Build the test file (rest of the returned documents) with open(os.path.join(resources_dir, filename + '.test'), 'a+') as test_file: for line in list(lines)[int((len(lines))*partition):]: test_file.write('%s\t%s\n' % (sentiment, line)) |

2.2.4 Build the queries for the relevant data, and create the files.

Add the ‘__main__’ section of the code, in every call for the ‘collect()’ function, we are passing the filename we want the train/test files to be called, the actual query to Webz.io for the specific data, the limit of lines of text we want to proccess and save, the sentiment class (positive/negative) for the current query and the partition of the recieved data between the train and the test file (80%/20% train/test split)

|

1 |

2.3 Finally let’s run the script from the Terminal to collect the data and create the files:

|

1 |

- Build the classifier models

We can now build 2 classifier models with the collected datasets above. For this demonstration we chose the stanford-nlp classifier. In this case our two identified classes were: Positive and Negative, and the respective strings of text.

The classification project is going to be written in java using maven, so let’s open the project and start working.

3.1 Get into the project directory via Terminal

|

1 |

3.2 Add the project Dependencies (3rd-party packages), by adding the following to the file ‘src/main/pom.xml’ under the ‘<dependencies>’ tag

|

1 |

3.3 Create a properties file to initiate the classification models.

Let’s create that file for both of our models inside the ‘resources’ directory from stage 2, and save it as review-sentiment.prop.

Copy and paste the following properties and save the file:

|

1 |

3.3 Edit the JAVA code

|

1 |

3.3.1 Imports

|

1 |

3.3.2 Declare the stanford-nlp ‘Column Data Classifier’ class variable inside the ‘App’ class that was generated

|

1 |

3.3.3 Create the ‘getSentimentFromText’ function to retrieve text and a classifier object that returns the sentiment class of the given text

|

1 |

3.3.4 Create the ‘setScore’ function which retrieves a test file and a classifier object and returns the precision, recall and F1-score for both positive and negative classes

|

1 |

3.3.5 Create the ‘main’ function which initiates the general and the domain-specific machine and tests their score with the hotels input, and print the results

|

1 |

4. Evaluating Performance and Results

We can evaluate the performance of each model using the F1-Score method (essentially a harmonic average of precision and recall) for positive and negative sentiment classification produced by each model.

4.1. Test the score of each model using expedia.test dataset.

4.2. View the results and evaluate

As expected, the results clearly show that the domain-specific model generated by rated reviews of hotels delivers more precise performance.

| General model 80/20 train/test on Booking.com data |

Precision | Recall | F1-Measure | |

| Positive | 0.864 | 0.843 | 0.8536585366 | |

| Negative | 0.852 | 0.872 | 0.8620689655 | |

| General Model test on Expedia.com data |

||||

| Positive | 0.878 | 0.805 | 0.8400520156 | |

| Negative | 0.659 | 0.77 | 0.7105882353 | |

| Domain specific model 80/20 train/test on Booking.com data |

||||

| Positive | 0.878 | 0.908 | 0.8926553672 | |

| Negative | 0.902 | 0.871 | 0.8862275449 | |

| Domain specific Model test on Expedia.com data |

||||

| Positive | 0.899 | 0.892 | 0.8926553672 | |

| Negative | 0.825 | 0.836 | 0.8307692308 | |

We put this tutorial together as a high level demonstration of the kind of machine learning models you can train using Webz.io data. You could apply your own models to a wide variety of use cases – business intelligence, cybersecurity, financial analysis, and much more. In fact, we would love to receive feedback from you to learn more about creative use of our data in machine learning models!