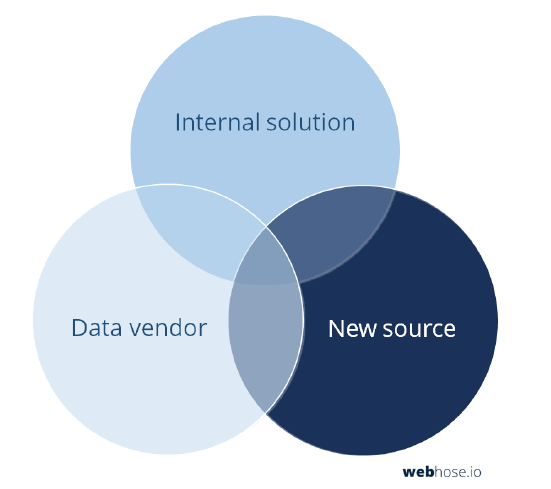

In our new report, we deconstruct the all-too-familiar race to achieve 100% coverage of the web. Data acquisition efforts usually rely on one of three approaches: building an internal web crawling capability, relying on data providers, or combining the two. The goal is to tap into as much structured web data as you can handle, so you can filter and consume precisely the data you need, on demand and at scale.

How to prevent missing data alerts

Your insights are only as good as the raw data that powers them. The harsh reality is that even a single missing data point could be perceived as a critical failure. The result is a “coverage race” to satisfy your customers’ expectations of perfect perceived coverage. That means doing everything you can to ensure your effective coverage meets or exceeds expectations.

The challenge then becomes a frustrating effort to direct a finite set of resources at an infinitely complex problem. Even if you could cover the entire web, would you really want to? How would you sift through the heaps of data to refine it into usable information, knowledge, and wisdom? Answering these questions is an ongoing technological challenge, and it is driving phenomenal growth in big data analytics solutions.

Scalable web data consumption

Suppose the coverage delivered by an internal crawling solution overlaps with roughly 10% of your existing data vendor’s coverage. It makes little sense to add a new data source for a coverage increase of that magnitude if it means doubling your data acquisition budget. But when economies of scale come into play, the picture changes dramatically.

The on-demand model delivers that same improvement at a fraction of your current budget without eroding the coverage you already have. Because most customers need only a small subset of the surface web, the emerging category of on-demand data consumption offers unprecedented scalability: you can invest as little as $50 per month to improve your effective coverage dramatically.
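To make the cost argument concrete, here is a minimal Python sketch that compares the extra monthly spend per percentage point of coverage gained under two options. The existing budget of $10,000 per month is a hypothetical figure (the article does not state one). The sketch treats the roughly 10% figure from the scenario above as the coverage gain. It also optimistically assumes that an entry-level $50 per month on-demand plan yields that same gain.

```python
# Hypothetical figures for illustration only: the article gives no existing
# budget, so EXISTING_MONTHLY_BUDGET is an assumption.
EXISTING_MONTHLY_BUDGET = 10_000.0  # USD per month (hypothetical)
COVERAGE_GAIN_POINTS = 10.0         # ~10 percentage points, per the scenario above
ON_DEMAND_MONTHLY_COST = 50.0       # "as little as $50 per month"


def cost_per_coverage_point(added_monthly_cost: float, coverage_gain_points: float) -> float:
    """Return the extra monthly spend per percentage point of coverage gained."""
    if coverage_gain_points <= 0:
        raise ValueError("coverage gain must be positive")
    return added_monthly_cost / coverage_gain_points


# Option A: a new source that doubles the budget, i.e. adds the same amount again.
doubling = cost_per_coverage_point(EXISTING_MONTHLY_BUDGET, COVERAGE_GAIN_POINTS)

# Option B: an on-demand plan, assuming it delivers the same coverage gain.
on_demand = cost_per_coverage_point(ON_DEMAND_MONTHLY_COST, COVERAGE_GAIN_POINTS)

print(f"Doubling the budget: ${doubling:,.2f} per coverage point")
print(f"On-demand plan:      ${on_demand:,.2f} per coverage point")
```

With these assumed numbers, doubling the budget costs $1,000 per coverage point, compared with $5 for the on-demand plan. Your own ratio will depend on your actual budget and on how much new coverage each option really adds.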